PGDIteration

- class deepinv.optim.optim_iterators.PGDIteration(**kwargs)

Bases: OptimIterator

Iterator for proximal gradient descent.

Class for a single iteration of the Proximal Gradient Descent (PGD) algorithm for minimizing \(f(x) + \lambda g(x)\).

The iteration is given by

\[\begin{aligned} u_{k} &= x_k - \gamma \nabla f(x_k) \\ x_{k+1} &= \operatorname{prox}_{\gamma \lambda g}(u_k), \end{aligned}\]

where \(\gamma\) is a stepsize that should satisfy \(\gamma \leq 2/\operatorname{Lip}(\nabla f)\), with \(\operatorname{Lip}(\nabla f)\) denoting the Lipschitz constant of \(\nabla f\).
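The two-step update above can be illustrated with a minimal, library-free sketch. It does not use the deepinv API: the helper names (`soft_threshold`, `grad_f`, `pgd_step`) are hypothetical, and the choices \(f(x) = \tfrac{1}{2}\|Ax - y\|^2\) and \(g(x) = \|x\|_1\) are assumptions made only for illustration. Under these assumptions the prox is elementwise soft-thresholding and the iteration reduces to ISTA.

```python
def soft_threshold(v, tau):
    """Prox of tau * ||.||_1 (assumed choice of g), applied elementwise."""
    return [max(abs(vi) - tau, 0.0) * (1.0 if vi > 0 else -1.0) for vi in v]


def grad_f(A, x, y):
    """Gradient of the assumed data term f(x) = 0.5 * ||A x - y||^2, i.e. A^T (A x - y)."""
    residual = [sum(a * xj for a, xj in zip(row, x)) - yi for row, yi in zip(A, y)]
    return [sum(A[i][j] * residual[i] for i in range(len(A))) for j in range(len(x))]


def pgd_step(x, A, y, gamma, lam):
    """One PGD iteration: u_k = x_k - gamma * grad f(x_k), then prox of gamma * lam * g."""
    u = [xj - gamma * gj for xj, gj in zip(x, grad_f(A, x, y))]
    return soft_threshold(u, gamma * lam)


# Toy problem (illustrative values only): A is diagonal, so Lip(grad f) is the
# largest eigenvalue of A^T A, here 4, and gamma = 0.25 respects gamma <= 2 / 4.
A = [[1.0, 0.0], [0.0, 2.0]]
y = [1.0, 0.1]
lam = 0.5
gamma = 0.25

x = [0.0, 0.0]
for _ in range(100):
    x = pgd_step(x, A, y, gamma, lam)
print(x)
```

For this separable toy problem the minimizer can be computed by hand as \((0.5, 0)\), and the iterates approach it; the second coordinate is thresholded to zero at every step because its gradient step never exceeds \(\gamma \lambda\).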

Examples using PGDIteration:

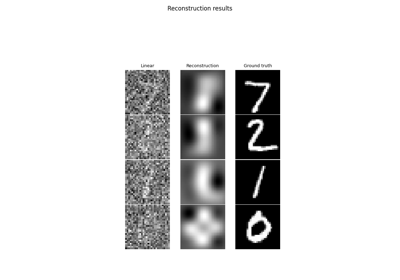

Learned Iterative Soft-Thresholding Algorithm (LISTA) for compressed sensing
