SupAdversarialGeneratorLoss

- class deepinv.loss.adversarial.SupAdversarialGeneratorLoss(weight_adv: float = 0.01, D: Module | None = None, device='cpu', **kwargs)[source]

Bases: GeneratorLoss

Supervised adversarial consistency loss for generator.

This loss was used in conditional GANs such as Kupyn et al., “DeblurGAN: Blind Motion Deblurring Using Conditional Adversarial Networks”, and generative models such as Bora et al., “Compressed Sensing using Generative Models”.

Constructs the adversarial loss between the reconstructed image and the ground truth, to be minimised by the generator.

\(\mathcal{L}_\text{adv}(x,\hat x;D)=\mathbb{E}_{x\sim p_x}\left[q(D(x))\right]+\mathbb{E}_{\hat x\sim p_{\hat x}}\left[q(1-D(\hat x))\right]\)
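The expectations in the formula above are estimated in practice by averaging over a batch. The following is a minimal sketch of that estimate in plain Python, not the library's implementation. It works on discriminator scores that have already been computed, and it assumes \(q=\log\) (the classic GAN choice), since \(q\) is not defined in this section. The function name `adversarial_objective` and the example scores are hypothetical.

```python
import math
from statistics import fmean


def adversarial_objective(d_real, d_fake, q=math.log):
    """Batch estimate of E[q(D(x))] + E[q(1 - D(x_hat))].

    d_real: discriminator scores D(x) on ground-truth images
    d_fake: discriminator scores D(x_hat) on reconstructed images
    q:      scalar function; math.log is an assumption made for this sketch
    """
    real_term = fmean([q(d) for d in d_real])
    fake_term = fmean([q(1.0 - d) for d in d_fake])
    return real_term + fake_term


# Hypothetical scores in (0, 1): D confidently rejects the reconstructions.
confident = adversarial_objective([0.9, 0.8], [0.1, 0.2])

# Hypothetical scores after the generator improves: D now rates the
# reconstructions as likely real, so the second term drops.
fooled = adversarial_objective([0.9, 0.8], [0.7, 0.6])
```

Under these assumptions `fooled` is smaller than `confident`, which matches the statement that the generator minimises this quantity. Only the second term depends on \(\hat x\), so it is the only term the generator can influence.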

See Imaging inverse problems with adversarial networks for examples of training generator and discriminator models.

Simple example (assuming a pretrained discriminator):

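    from deepinv.loss.adversarial import SupAdversarialGeneratorLoss
    from deepinv.models import DCGANDiscriminator

    D = DCGANDiscriminator()  # assume pretrained discriminator
    loss = SupAdversarialGeneratorLoss(D=D)
    l = loss(x, x_net)  # x: ground truth image, x_net: reconstructed image
    l.backward()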

- Parameters:

weight_adv (float) – weight for adversarial loss, defaults to 0.01 (from original paper)

D (torch.nn.Module) – discriminator network. If not specified, D must be provided in forward(). Defaults to None.

device (str) – torch device, defaults to “cpu”

- forward(x: Tensor, x_net: Tensor, D: Module | None = None, **kwargs) → Tensor[source]

Forward pass for supervised adversarial generator loss.

- Parameters:

x (Tensor) – ground truth image

x_net (Tensor) – reconstructed image

D (nn.Module) – discriminator model. If None, the D passed to __init__ is used. Defaults to None.

Examples using SupAdversarialGeneratorLoss:

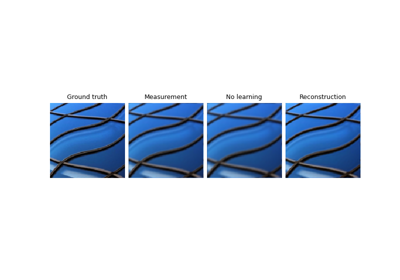

Imaging inverse problems with adversarial networks