UnsupAdversarialDiscriminatorLoss

- class deepinv.loss.adversarial.UnsupAdversarialDiscriminatorLoss(weight_adv: float = 1.0, D: Module | None = None, device='cpu')

Bases: DiscriminatorLoss

Unsupervised adversarial consistency loss for discriminator.

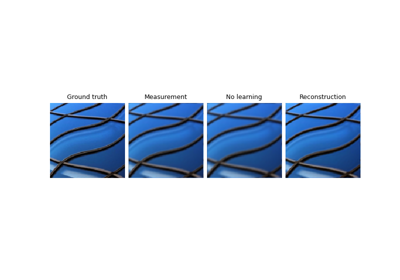

This loss has been used to train unsupervised generative models such as AmbientGAN (Bora et al., “AmbientGAN: Generative models from lossy measurements”).

Constructs an adversarial loss between the input measurement \(y\) and the re-measured reconstruction \(\hat y\), to be maximised by the discriminator \(D\):

\(\mathcal{L}_\text{adv}(y,\hat y;D)=\mathbb{E}_{y\sim p_y}\left[q(D(y))\right]+\mathbb{E}_{\hat y\sim p_{\hat y}}\left[q(1-D(\hat y))\right]\)

See Imaging inverse problems with adversarial networks for examples of training generator and discriminator models.
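The objective above can be illustrated numerically with a minimal, library-independent sketch. It does not use deepinv and is not the library's implementation. The function name `adversarial_discriminator_objective` is hypothetical, and because this page does not define \(q\), the sketch assumes \(q = \log\) as in the classic GAN objective. The library's own choice of \(q\) may differ. Expectations are replaced by sample means over discriminator outputs.

```python
import math


def adversarial_discriminator_objective(d_real, d_fake, q=math.log):
    """Sample estimate of E[q(D(y))] + E[q(1 - D(y_hat))].

    d_real: discriminator outputs D(y) on input measurements, each in (0, 1)
    d_fake: discriminator outputs D(y_hat) on re-measured reconstructions
    q:      scalar function applied to each output (ASSUMPTION: log)
    """
    real_term = sum(q(d) for d in d_real) / len(d_real)
    fake_term = sum(q(1.0 - d) for d in d_fake) / len(d_fake)
    return real_term + fake_term


# A discriminator that scores real measurements high and re-measured
# reconstructions low ...
good = adversarial_discriminator_objective([0.9, 0.8], [0.1, 0.2])

# ... compared with an undecided one that outputs 0.5 everywhere.
undecided = adversarial_discriminator_objective([0.5, 0.5], [0.5, 0.5])

# The objective is larger for the better discriminator, which is why the
# discriminator maximises it.
print(good > undecided)
```

Under the same assumption, the `weight_adv` parameter would simply scale the returned value.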

- Parameters:

weight_adv (float) – weight for adversarial loss, defaults to 1.0

D (torch.nn.Module) – discriminator network. If not specified, D must be provided in forward(). Defaults to None.

device (str) – torch device, defaults to “cpu”

- forward(y: Tensor, y_hat: Tensor, D: Module | None = None, **kwargs)

Forward pass for unsupervised adversarial discriminator loss.

- Parameters:

y (Tensor) – input measurement

y_hat (Tensor) – re-measured reconstruction

D (nn.Module) – discriminator model. If None, the D passed to __init__ is used. Defaults to None.

Examples using UnsupAdversarialDiscriminatorLoss:

Imaging inverse problems with adversarial networks