unfolded_builder

- deepinv.unfolded.unfolded_builder(iteration, params_algo={'lambda': 1.0, 'stepsize': 1.0}, data_fidelity=None, prior=None, max_iter=5, trainable_params=['lambda', 'stepsize'], device=device(type='cpu'), F_fn=None, g_first=False, bregman_potential=None, **kwargs)

Helper function for building an unfolded architecture.

- Parameters:

iteration (str, deepinv.optim.OptimIterator) – either the name of the algorithm to be used, or directly an optim iterator. If an algorithm name (string), it should be one of "GD" (gradient descent), "PGD" (proximal gradient descent), "ADMM" (ADMM), "HQS" (half-quadratic splitting), "CP" (Chambolle-Pock) or "DRS" (Douglas-Rachford). See the optim documentation for more details.

params_algo (dict) – dictionary containing all the relevant parameters for running the algorithm, e.g. the stepsize, regularisation parameter, denoising standard deviation. Each value of the dictionary can be either an Iterable (distinct value for each iteration) or a single float (same value for each iteration). Default: {"stepsize": 1.0, "lambda": 1.0}. See Parameters for more details.

data_fidelity (list, deepinv.optim.DataFidelity) – data-fidelity term. Either a single instance (same data-fidelity for each iteration) or a list of instances of deepinv.optim.DataFidelity() (distinct data-fidelity for each iteration). Default: None.

prior (list, deepinv.optim.Prior) – regularization prior. Either a single instance (same prior for each iteration - weight tied) or a list of instances of deepinv.optim.Prior (distinct prior for each iteration - weight untied). Default: None.

max_iter (int) – number of iterations of the unfolded algorithm. Default: 5.

trainable_params (list) – list of parameters to be trained. Each parameter should be a key of the params_algo dictionary for the deepinv.optim.OptimIterator class. This does not encompass the trainable weights of the prior module.

F_fn (callable) – custom user-supplied cost function. Default: None.

device (torch.device) – device on which to perform the computations. Default: torch.device("cpu").

g_first (bool) – whether to perform the step on \(g\) before the step on \(f\). Default: False.

bregman_potential (deepinv.optim.Bregman) – Bregman potential used for Bregman optimization algorithms such as Mirror Descent. Default: None, which falls back to standard Euclidean optimization.

kwargs – additional arguments to be passed to the BaseOptim() class.
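As a rough illustration of how `params_algo`, `max_iter` and `g_first` interact, the dependency-free sketch below unrolls proximal gradient descent on the toy problem 0.5 * ||x - y||^2 + lambda * ||x||_1. It is not deepinv's implementation: `expand_param`, `soft_threshold` and `unrolled_pgd` are hypothetical names, and the identity forward operator and L1 prior are assumptions chosen to keep the example short.

```python
from collections.abc import Iterable


def expand_param(value, max_iter):
    """Turn a scalar or an iterable into one value per iteration (hypothetical helper)."""
    if isinstance(value, Iterable):
        values = [float(v) for v in value]
        if len(values) != max_iter:
            raise ValueError(f"expected {max_iter} values, got {len(values)}")
        return values
    return [float(value)] * max_iter


def soft_threshold(x, tau):
    """Proximal operator of tau * ||x||_1: subtract each entry clipped to [-tau, tau]."""
    return [v - max(-tau, min(tau, v)) for v in x]


def unrolled_pgd(y, params_algo, max_iter=5, g_first=False):
    """Run max_iter proximal gradient steps, one (stepsize, lambda) pair per step."""
    stepsizes = expand_param(params_algo["stepsize"], max_iter)
    lambdas = expand_param(params_algo["lambda"], max_iter)
    x = list(y)  # assumption: initialise with the measurement
    for step, lam in zip(stepsizes, lambdas):
        if g_first:
            # Step on g (the prior) first, then the gradient step on f.
            x = soft_threshold(x, step * lam)
            x = [xi - step * (xi - yi) for xi, yi in zip(x, y)]
        else:
            # Gradient step on f (the data fidelity) first, then the step on g.
            x = [xi - step * (xi - yi) for xi, yi in zip(x, y)]
            x = soft_threshold(x, step * lam)
    return x


y = [3.0, -0.5, 1.5]
# A single float is shared by all iterations.
x_shared = unrolled_pgd(y, {"stepsize": 1.0, "lambda": 1.0}, max_iter=3)
# A list provides a distinct stepsize for each of the three iterations.
x_per_iter = unrolled_pgd(y, {"stepsize": [1.0, 0.5, 0.25], "lambda": 1.0}, max_iter=3)
```

On this toy problem both calls return the soft-thresholded measurement `[2.0, 0.0, 0.5]`, while passing `g_first=True` with a unit stepsize returns `y` unchanged, which shows that the order of the two steps changes the output after a fixed number of iterations.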

- Returns:

an unfolded architecture (instance of BaseUnfold()).

- Example:

    >>> import torch
    >>> import deepinv as dinv
    >>>
    >>> # Create a trainable unfolded architecture
    >>> model = dinv.unfolded.unfolded_builder(
    ...     iteration="PGD",
    ...     data_fidelity=dinv.optim.data_fidelity.L2(),
    ...     prior=dinv.optim.PnP(dinv.models.DnCNN(in_channels=1, out_channels=1)),
    ...     params_algo={"stepsize": 1.0, "g_param": 1.0},
    ...     trainable_params=["stepsize", "g_param"]
    ... )
    >>> # Forward pass
    >>> x = torch.randn(1, 1, 16, 16)
    >>> physics = dinv.physics.Denoising()
    >>> y = physics(x)
    >>> x_hat = model(y, physics)
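To make concrete what it means for an entry of `trainable_params` to be learned, here is a toy sketch that tunes the stepsize of a five-iteration unrolled gradient descent so that its output matches known ground truths. Everything in it is an assumption made for illustration: the scalar quadratic objective, the made-up data pairs, the names `unrolled_gd` and `loss`, and the use of finite differences in place of the automatic differentiation that a PyTorch-based model would presumably rely on.

```python
def unrolled_gd(y, stepsize, lam=1.0, max_iter=5):
    """max_iter gradient steps on 0.5 * (x - y)^2 + 0.5 * lam * x^2, starting from x = 0."""
    x = 0.0
    for _ in range(max_iter):
        grad = (x - y) + lam * x
        x = x - stepsize * grad
    return x


def loss(stepsize, data):
    """Mean squared error between the unrolled output and the ground truth."""
    return sum((unrolled_gd(y, stepsize) - x_true) ** 2 for x_true, y in data) / len(data)


# Toy supervised pairs (ground truth, measurement); the values are made up.
data = [(1.0, 2.1), (-0.5, -0.9), (2.0, 4.2)]

stepsize = 0.1        # initial value, playing the role of params_algo["stepsize"]
lr, eps = 0.02, 1e-6  # learning rate and finite-difference width
initial_loss = loss(stepsize, data)
for _ in range(200):
    # Central finite difference stands in for automatic differentiation.
    grad = (loss(stepsize + eps, data) - loss(stepsize - eps, data)) / (2 * eps)
    stepsize -= lr * grad
final_loss = loss(stepsize, data)
```

The learned stepsize is the one that works best for exactly five iterations on this data, which is the point of unfolding: the algorithm parameters are fitted to a fixed iteration budget rather than chosen for asymptotic convergence.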

Examples using unfolded_builder:

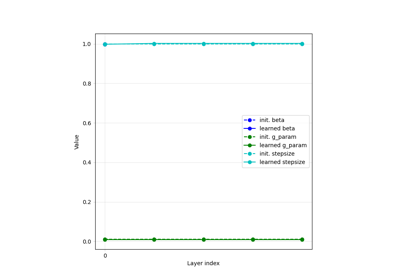

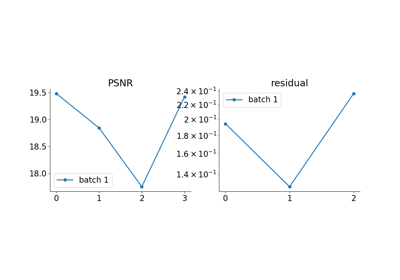

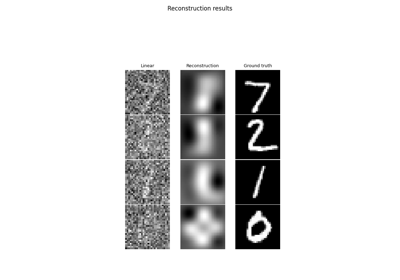

Learned Iterative Soft-Thresholding Algorithm (LISTA) for compressed sensing

Unfolded Chambolle-Pock for constrained image inpainting