Note

New to DeepInverse? Get started with the basics with the 5 minute quickstart tutorial..

Unfolded Chambolle-Pock for constrained image inpainting#

Image inpainting consists in solving \(y = Ax\) where \(A\) is a mask operator. This problem can be reformulated as the following minimization problem:

where \(\iota_{\mathcal{B}_2(y, r)}\) is the indicator function of the ball of radius \(r\) centered at \(y\) for the \(\ell_2\) norm, and \(\regname\) is a regularisation. Recall that the indicator function of a convex set \(\mathcal{C}\) is defined as \(\iota_{\mathcal{C}}(x) = 0\) if \(x \in \mathcal{C}\) and \(\iota_{\mathcal{C}}(x) = +\infty\) otherwise.

In this example, we unfold the Chambolle-Pock algorithm to solve this problem, and learn the thresholding parameters of a wavelet denoiser in a LISTA fashion.

from pathlib import Path

import torch

from torch.utils.data import DataLoader

from torchvision import transforms

import deepinv as dinv

from deepinv.utils import load_dataset

from deepinv.optim.data_fidelity import IndicatorL2

from deepinv.optim.prior import PnP

from deepinv.optim import PDCP

Setup paths for data loading and results.#

Selected GPU 0 with 9405.25 MiB free memory

Load base image datasets and degradation operators.#

In this example, we use the CBSD68 dataset for training and the set3c dataset for testing. We work with images of size 32x32 if no GPU is available, else 128x128.

operation = "inpainting"

train_dataset_name = "CBSD68"

test_dataset_name = "set3c"

img_size = 128 if torch.cuda.is_available() else 32

test_transform = transforms.Compose(

[transforms.CenterCrop(img_size), transforms.ToTensor()]

)

train_transform = transforms.Compose(

[transforms.RandomCrop(img_size), transforms.ToTensor()]

)

train_base_dataset = load_dataset(train_dataset_name, transform=train_transform)

test_base_dataset = load_dataset(test_dataset_name, transform=test_transform)

Downloading datasets/CBSD68.zip

0%| | 0.00/19.8M [00:00<?, ?iB/s]

44%|████▍ | 8.77M/19.8M [00:00<00:00, 87.7MiB/s]

100%|██████████| 19.8M/19.8M [00:00<00:00, 105MiB/s]

CBSD68 dataset downloaded in datasets

Define forward operator and generate dataset#

We define an inpainting operator that randomly masks pixels with probability 0.5.

A dataset of pairs of measurements and ground truth images is then generated using the

deepinv.datasets.generate_dataset() function.

Once the dataset is generated, we can load it using the deepinv.datasets.HDF5Dataset class.

n_channels = 3 # 3 for color images, 1 for gray-scale images

probability_mask = 0.5 # probability to mask pixel

# Generate inpainting operator

physics = dinv.physics.Inpainting(

img_size=(n_channels, img_size, img_size), mask=probability_mask, device=device

)

# Use parallel dataloader if using a GPU to speed up training,

# otherwise, as all computes are on CPU, use synchronous data loading.

num_workers = 4 if torch.cuda.is_available() else 0

n_images_max = (

100 if torch.cuda.is_available() else 50

) # maximal number of images used for training

my_dataset_name = "demo_training_inpainting"

measurement_dir = DATA_DIR / train_dataset_name / operation

deepinv_datasets_path = dinv.datasets.generate_dataset(

train_dataset=train_base_dataset,

test_dataset=test_base_dataset,

physics=physics,

device=device,

save_dir=measurement_dir,

train_datapoints=n_images_max,

num_workers=num_workers,

dataset_filename=str(my_dataset_name),

)

train_dataset = dinv.datasets.HDF5Dataset(path=deepinv_datasets_path, train=True)

test_dataset = dinv.datasets.HDF5Dataset(path=deepinv_datasets_path, train=False)

train_batch_size = 32 if torch.cuda.is_available() else 3

test_batch_size = 32 if torch.cuda.is_available() else 3

train_dataloader = DataLoader(

train_dataset, batch_size=train_batch_size, num_workers=num_workers, shuffle=True

)

test_dataloader = DataLoader(

test_dataset, batch_size=test_batch_size, num_workers=num_workers, shuffle=False

)

Dataset has been saved at measurements/CBSD68/inpainting/demo_training_inpainting0.h5

Set up the reconstruction network#

We unfold the Chambolle-Pock algorithm as follows:

\[\begin{split}\begin{equation*} \begin{aligned} u_{k+1} &= \operatorname{prox}_{\sigma d^*}(u_k + \sigma A z_k) \\ x_{k+1} &= \operatorname{D_{\sigma}}(x_k-\tau A^\top u_{k+1}) \\ z_{k+1} &= 2x_{k+1} -x_k \\ \end{aligned} \end{equation*}\end{split}\]

where \(\operatorname{D_{\sigma}}\) is a wavelet denoiser with thresholding parameters \(\sigma\).

The learnable parameters of our network are \(\tau\) and \(\sigma\).

# Select the data fidelity term

data_fidelity = IndicatorL2(radius=0.0)

# Set up the trainable denoising prior; here, the soft-threshold in a wavelet basis.

# If the prior is initialized with a list of length max_iter,

# then a distinct weight is trained for each CP iteration.

# For fixed trained model prior across iterations, initialize with a single model.

max_iter = 30 if torch.cuda.is_available() else 20 # Number of unrolled iterations

level = 3

prior = [

PnP(denoiser=dinv.models.WaveletDenoiser(wv="db8", level=level, device=device))

for i in range(max_iter)

]

# Unrolled optimization algorithm parameters

stepsize = [

1.0

] * max_iter # initialization of the stepsizes. A distinct stepsize is trained for each iteration.

sigma_denoiser = [

0.01 * torch.ones(1, level, 3)

] * max_iter # thresholding parameter of the wavelet denoiser.

stepsize_dual = 1.0 # dual stepsize for Chambolle-Pock

# define which parameters are trainable : here, both the regularization parameters and the primal/dual stepsizes are learned.

trainable_params = ["sigma_denoiser", "stepsize", "stepsize_dual"]

# Define the unfolded trainable model. # See the documentation of the Primal Dual CP algorithm :class:`deepinv.optim.PDCP` for more details.

model = PDCP(

stepsize=stepsize,

sigma_denoiser=sigma_denoiser,

stepsize_dual=stepsize_dual,

K=physics.A,

K_adjoint=physics.A_adjoint,

trainable_params=trainable_params,

data_fidelity=data_fidelity,

max_iter=max_iter,

prior=prior,

unfold=True,

)

Train the model#

We train the model using the deepinv.Trainer class.

We perform supervised learning and use the mean squared error as loss function. This can be easily done using the

deepinv.loss.SupLoss class.

Note

In this example, we only train for a few epochs to keep the training time short on CPU. For a good reconstruction quality, we recommend to train for at least 50 epochs.

epochs = 10 if torch.cuda.is_available() else 5 # choose training epochs

learning_rate = 1e-3

verbose = True # print training information

# choose training losses

losses = dinv.loss.SupLoss(metric=dinv.metric.MSE())

# choose optimizer and scheduler

optimizer = torch.optim.Adam(model.parameters(), lr=learning_rate, weight_decay=1e-8)

scheduler = torch.optim.lr_scheduler.StepLR(optimizer, step_size=int(epochs * 0.8))

trainer = dinv.Trainer(

model=model,

scheduler=scheduler,

losses=losses,

device=device,

optimizer=optimizer,

physics=physics,

train_dataloader=train_dataloader,

save_path=str(CKPT_DIR / operation),

verbose=verbose,

show_progress_bar=False, # disable progress bar for better vis in sphinx gallery.

epochs=epochs,

)

model = trainer.train()

/local/jtachell/deepinv/deepinv/deepinv/training/trainer.py:1354: UserWarning: non_blocking_transfers=True but DataLoader.pin_memory=False; set pin_memory=True to overlap host-device copies with compute.

self.setup_train()

The model has 301 trainable parameters

Train epoch 0: TotalLoss=0.021, PSNR=20.469

Train epoch 1: TotalLoss=0.004, PSNR=26.057

Train epoch 2: TotalLoss=0.003, PSNR=26.273

Train epoch 3: TotalLoss=0.003, PSNR=26.421

Train epoch 4: TotalLoss=0.003, PSNR=26.543

Train epoch 5: TotalLoss=0.003, PSNR=26.645

Train epoch 6: TotalLoss=0.003, PSNR=26.73

Train epoch 7: TotalLoss=0.003, PSNR=26.803

Train epoch 8: TotalLoss=0.003, PSNR=26.854

Train epoch 9: TotalLoss=0.003, PSNR=26.86

Test the network#

We can now test the trained network using the deepinv.test() function.

The testing function will compute test_psnr metrics and plot and save the results.

plot_images = True

method = "artifact_removal"

trainer.test(test_dataloader)

/local/jtachell/deepinv/deepinv/deepinv/training/trainer.py:1546: UserWarning: non_blocking_transfers=True but DataLoader.pin_memory=False; set pin_memory=True to overlap host-device copies with compute.

self.setup_train(train=False)

Eval epoch 0: PSNR=24.595, PSNR no learning=6.654

Test results:

PSNR no learning: 6.654 +- 0.894

PSNR: 24.595 +- 0.321

{'PSNR no learning': 6.6535491943359375, 'PSNR no learning_std': 0.8941914593348746, 'PSNR': 24.594510396321613, 'PSNR_std': 0.3212191426797495}

Saving the model#

We can save the trained model following the standard PyTorch procedure.

# Save the model

torch.save(model.state_dict(), CKPT_DIR / operation / "model.pth")

Loading the model#

Similarly, we can load our trained unfolded architecture following the standard PyTorch procedure. To check that the loading is performed correctly, we use new variables for the initialization of the model.

# Set up the trainable denoising prior; here, the soft-threshold in a wavelet basis.

level = 3

model_spec = {

"name": "waveletprior",

"args": {"wv": "db8", "level": level, "device": device},

}

# If the prior is initialized with a list of length max_iter,

# then a distinct weight is trained for each PGD iteration.

# For fixed trained model prior across iterations, initialize with a single model.

max_iter = 30 if torch.cuda.is_available() else 20 # Number of unrolled iterations

prior_new = [

PnP(denoiser=dinv.models.WaveletDenoiser(wv="db8", level=level, device=device))

for i in range(max_iter)

]

# Unrolled optimization algorithm parameters

stepsize = [

1.0

] * max_iter # initialization of the stepsizes. A distinct stepsize is trained for each iteration.

sigma_denoiser = [0.01 * torch.ones(1, level, 3)] * max_iter

stepsize_dual = 1.0 # stepsize for Chambolle-Pock

model_new = PDCP(

unfold=True,

stepsize=stepsize,

sigma_denoiser=sigma_denoiser,

stepsize_dual=stepsize_dual,

K=physics.A,

K_adjoint=physics.A_adjoint,

trainable_params=trainable_params,

data_fidelity=data_fidelity,

max_iter=max_iter,

prior=prior_new,

g_first=False,

)

model_new.load_state_dict(torch.load(CKPT_DIR / operation / "model.pth"))

model_new.eval()

# Test the model and check that the results are the same as before saving.

dinv.training.test(

model_new, test_dataloader, physics=physics, device=device, show_progress_bar=False

)

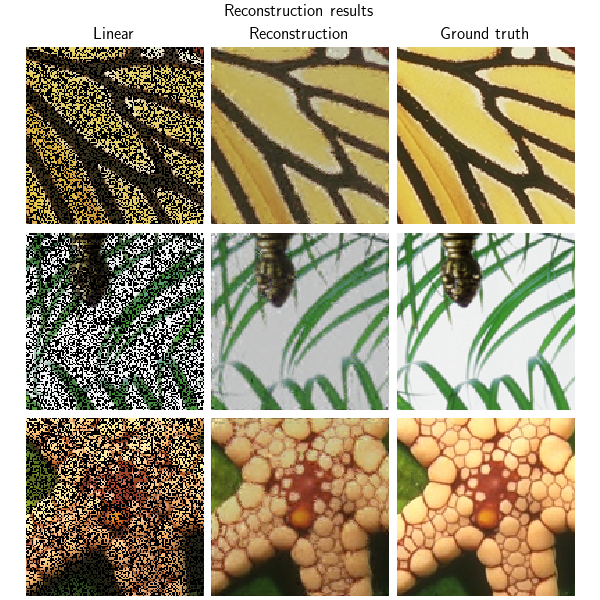

# Plot the results

test_sample, _ = next(iter(test_dataloader))

model.eval()

test_sample = test_sample.to(device)

# Get the measurements and the ground truth

y = physics(test_sample)

with torch.no_grad():

rec = model(y, physics=physics)

backprojected = physics.A_adjoint(y)

dinv.utils.plot(

[backprojected, rec, test_sample],

titles=["Linear", "Reconstruction", "Ground truth"],

suptitle="Reconstruction results",

)

Eval epoch 0: PSNR=24.595, PSNR no learning=6.654

Test results:

PSNR no learning: 6.654 +- 0.894

PSNR: 24.595 +- 0.321

/local/jtachell/deepinv/deepinv/deepinv/utils/plotting.py:408: UserWarning: This figure was using a layout engine that is incompatible with subplots_adjust and/or tight_layout; not calling subplots_adjust.

fig.subplots_adjust(top=0.75)

Total running time of the script: (0 minutes 41.818 seconds)