Note

New to DeepInverse? Get started with the basics with the 5 minute quickstart tutorial..

Vanilla Unfolded algorithm for super-resolution#

This is a simple example to show how to use vanilla unfolded Plug-and-Play. The DnCNN denoiser and the algorithm parameters (stepsize, regularization parameters) are trained jointly. For simplicity, we show how to train the algorithm on a small dataset. For optimal results, use a larger dataset.

import deepinv as dinv

import torch

from deepinv.models.utils import get_weights_url

from torch.utils.data import DataLoader

from deepinv.optim.data_fidelity import L2

from deepinv.optim.prior import PnP

from deepinv.optim import DRS

from torchvision import transforms

from deepinv.utils import get_data_home

from deepinv.datasets import BSDS500

Setup paths for data loading and results.#

BASE_DIR = get_data_home()

DATA_DIR = BASE_DIR / "measurements"

RESULTS_DIR = BASE_DIR / "results"

CKPT_DIR = BASE_DIR / "ckpts"

# Set the global random seed from pytorch to ensure reproducibility of the example.

torch.manual_seed(0)

device = dinv.utils.get_device()

Selected GPU 0 with 5035.125 MiB free memory

Load base image datasets and degradation operators.#

In this example, we use the CBSD500 dataset for training and the Set3C dataset for testing.

img_size = 64 if torch.cuda.is_available() else 32

n_channels = 3 # 3 for color images, 1 for gray-scale images

operation = "super-resolution"

Generate a dataset of low resolution images and load it.#

We use the Downsampling class from the physics module to generate a dataset of low resolution images.

# For simplicity, we use a small dataset for training.

# To be replaced for optimal results. For example, you can use the larger DIV2K or LSDIR datasets (also provided in the library).

# Specify the train and test transforms to be applied to the input images.

test_transform = transforms.Compose(

[transforms.CenterCrop(img_size), transforms.ToTensor()]

)

train_transform = transforms.Compose(

[transforms.RandomCrop(img_size), transforms.ToTensor()]

)

# Define the base train and test datasets of clean images.

train_base_dataset = BSDS500(

BASE_DIR, download=True, train=True, transform=train_transform

)

test_base_dataset = BSDS500(

BASE_DIR, download=False, train=False, transform=test_transform

)

# Use parallel dataloader if using a GPU to speed up training, otherwise, as all computes are on CPU, use synchronous

# dataloading.

num_workers = 4 if torch.cuda.is_available() else 0

# Degradation parameters

factor = 2

noise_level_img = 0.03

# Generate the gaussian blur downsampling operator.

physics = dinv.physics.Downsampling(

filter="gaussian",

img_size=(n_channels, img_size, img_size),

factor=factor,

device=device,

noise_model=dinv.physics.GaussianNoise(sigma=noise_level_img),

)

my_dataset_name = "demo_unfolded_sr"

n_images_max = (

None if torch.cuda.is_available() else 10

) # max number of images used for training (use all if you have a GPU)

measurement_dir = DATA_DIR / "BSDS500" / operation

generated_datasets_path = dinv.datasets.generate_dataset(

train_dataset=train_base_dataset,

test_dataset=test_base_dataset,

physics=physics,

device=device,

save_dir=measurement_dir,

train_datapoints=n_images_max,

num_workers=num_workers,

dataset_filename=str(my_dataset_name),

)

train_dataset = dinv.datasets.HDF5Dataset(path=generated_datasets_path, train=True)

test_dataset = dinv.datasets.HDF5Dataset(path=generated_datasets_path, train=False)

0it [00:00, ?it/s]

2.56MB [00:00, 26.8MB/s]

5.12MB [00:00, 26.2MB/s]

7.69MB [00:00, 25.7MB/s]

10.2MB [00:00, 26.0MB/s]

12.8MB [00:00, 25.6MB/s]

15.3MB [00:00, 25.7MB/s]

17.9MB [00:00, 25.9MB/s]

20.4MB [00:00, 25.7MB/s]

23.6MB [00:00, 28.0MB/s]

26.5MB [00:01, 28.8MB/s]

29.3MB [00:01, 28.6MB/s]

32.6MB [00:01, 30.1MB/s]

35.4MB [00:01, 29.5MB/s]

38.4MB [00:01, 29.8MB/s]

41.6MB [00:01, 30.8MB/s]

44.8MB [00:01, 31.3MB/s]

47.8MB [00:01, 31.0MB/s]

50.8MB [00:01, 30.5MB/s]

53.9MB [00:01, 31.0MB/s]

56.9MB [00:02, 28.5MB/s]

59.7MB [00:02, 24.0MB/s]

62.1MB [00:02, 21.6MB/s]

64.3MB [00:02, 20.1MB/s]

66.3MB [00:02, 19.5MB/s]

68.2MB [00:02, 18.4MB/s]

70.1MB [00:02, 17.9MB/s]

71.8MB [00:03, 16.7MB/s]

73.6MB [00:03, 17.1MB/s]

75.4MB [00:03, 17.4MB/s]

77.1MB [00:03, 17.2MB/s]

78.8MB [00:03, 17.0MB/s]

80.4MB [00:03, 17.1MB/s]

82.1MB [00:03, 16.8MB/s]

83.8MB [00:03, 16.9MB/s]

85.4MB [00:03, 16.7MB/s]

87.2MB [00:03, 17.0MB/s]

88.9MB [00:04, 17.1MB/s]

90.6MB [00:04, 16.9MB/s]

92.2MB [00:04, 16.9MB/s]

93.9MB [00:04, 16.8MB/s]

95.6MB [00:04, 16.8MB/s]

97.2MB [00:04, 17.0MB/s]

99.0MB [00:04, 17.1MB/s]

101MB [00:04, 17.0MB/s]

102MB [00:04, 17.2MB/s]

104MB [00:05, 17.0MB/s]

106MB [00:05, 16.7MB/s]

107MB [00:05, 17.0MB/s]

109MB [00:05, 16.8MB/s]

111MB [00:05, 16.8MB/s]

112MB [00:05, 17.2MB/s]

114MB [00:05, 16.9MB/s]

116MB [00:05, 17.0MB/s]

118MB [00:05, 16.9MB/s]

119MB [00:05, 15.8MB/s]

121MB [00:06, 15.4MB/s]

122MB [00:06, 15.9MB/s]

124MB [00:06, 16.8MB/s]

126MB [00:06, 16.6MB/s]

128MB [00:06, 16.6MB/s]

129MB [00:06, 16.9MB/s]

131MB [00:06, 16.9MB/s]

133MB [00:06, 17.8MB/s]

135MB [00:07, 9.16MB/s]

139MB [00:07, 15.9MB/s]

142MB [00:07, 18.6MB/s]

144MB [00:07, 19.8MB/s]

148MB [00:07, 24.9MB/s]

152MB [00:07, 27.8MB/s]

155MB [00:08, 19.0MB/s]

157MB [00:08, 13.3MB/s]

159MB [00:08, 11.7MB/s]

160MB [00:08, 18.9MB/s]

Extracting: 0%| | 0/2492 [00:00<?, ?it/s]

Extracting: 4%|▍ | 101/2492 [00:00<00:02, 1009.49it/s]

Extracting: 8%|▊ | 202/2492 [00:00<00:02, 970.66it/s]

Extracting: 12%|█▏ | 301/2492 [00:00<00:02, 978.99it/s]

Extracting: 16%|█▌ | 399/2492 [00:00<00:02, 936.68it/s]

Extracting: 20%|█▉ | 497/2492 [00:00<00:02, 949.42it/s]

Extracting: 24%|██▍ | 606/2492 [00:00<00:01, 994.30it/s]

Extracting: 29%|██▊ | 712/2492 [00:00<00:01, 1014.31it/s]

Extracting: 33%|███▎ | 822/2492 [00:00<00:01, 1041.14it/s]

Extracting: 37%|███▋ | 932/2492 [00:00<00:01, 1057.62it/s]

Extracting: 42%|████▏ | 1038/2492 [00:01<00:01, 851.34it/s]

Extracting: 45%|████▌ | 1130/2492 [00:01<00:02, 478.69it/s]

Extracting: 48%|████▊ | 1201/2492 [00:01<00:03, 372.07it/s]

Extracting: 50%|█████ | 1257/2492 [00:02<00:03, 338.66it/s]

Extracting: 52%|█████▏ | 1304/2492 [00:02<00:03, 304.41it/s]

Extracting: 54%|█████▍ | 1343/2492 [00:02<00:04, 284.45it/s]

Extracting: 55%|█████▌ | 1377/2492 [00:02<00:04, 264.34it/s]

Extracting: 56%|█████▋ | 1407/2492 [00:02<00:04, 253.63it/s]

Extracting: 58%|█████▊ | 1435/2492 [00:02<00:04, 247.51it/s]

Extracting: 59%|█████▊ | 1461/2492 [00:03<00:04, 241.18it/s]

Extracting: 60%|█████▉ | 1486/2492 [00:03<00:04, 235.64it/s]

Extracting: 61%|██████ | 1510/2492 [00:03<00:04, 227.34it/s]

Extracting: 62%|██████▏ | 1552/2492 [00:03<00:03, 274.24it/s]

Extracting: 66%|██████▌ | 1639/2492 [00:03<00:02, 369.70it/s]

Extracting: 67%|██████▋ | 1676/2492 [00:03<00:02, 308.65it/s]

Extracting: 69%|██████▊ | 1708/2492 [00:03<00:03, 243.21it/s]

Extracting: 94%|█████████▎| 2335/2492 [00:04<00:00, 1447.44it/s]

Extracting: 100%|██████████| 2492/2492 [00:04<00:00, 618.06it/s]

Dataset has been saved at datasets/measurements/BSDS500/super-resolution/demo_unfolded_sr0.h5

Define the unfolded PnP algorithm.#

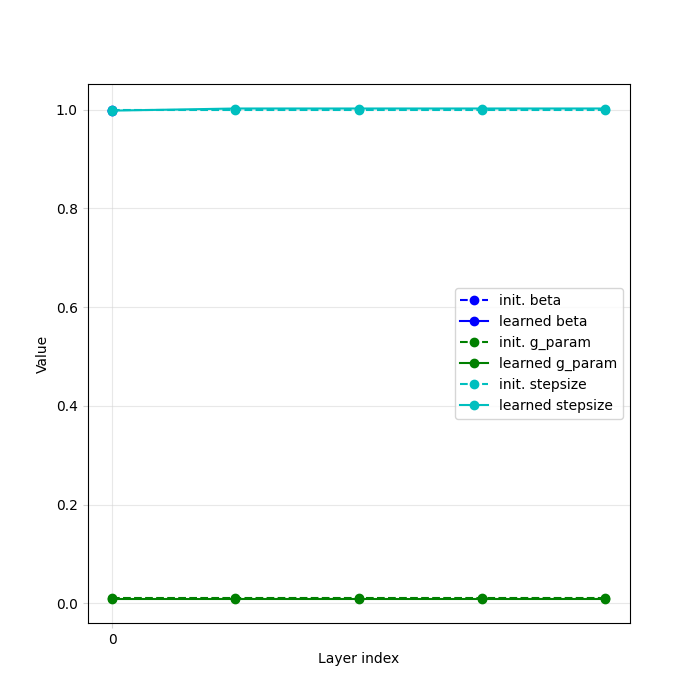

The chosen algorithm is here DRS (Douglas-Rachford Splitting). Note that if the prior (resp. a parameter) is initialized with a list of length max_iter, then a distinct model (resp. parameter) is trained for each iteration. For fixed trained model prior (resp. parameter) across iterations, initialize with a single element.

# Unrolled optimization algorithm parameters

max_iter = 5 # number of unfolded layers

# Select the data fidelity term

data_fidelity = L2()

# Set up the trainable denoising prior

# Here the prior model is common for all iterations

prior = PnP(denoiser=dinv.models.DnCNN(depth=20, pretrained="download").to(device))

# The parameters are initialized with a list of length max_iter, so that a distinct parameter is trained for each iteration.

stepsize = [1.0] * max_iter # stepsize of the algorithm

sigma_denoiser = [

1.0

] * max_iter # noise level parameter of the denoiser (not used by DnCNN)

beta = 1.0 # relaxation parameter of the Douglas-Rachford splitting

trainable_params = [

"stepsize",

"beta",

"sigma_denoiser",

] # define which parameters are trainable

# Logging parameters

verbose = True

# Define the unfolded trainable model.

model = DRS(

stepsize=stepsize,

sigma_denoiser=sigma_denoiser,

beta=beta,

trainable_params=trainable_params,

data_fidelity=data_fidelity,

max_iter=max_iter,

prior=prior,

unfold=True,

)

Define the training parameters.#

We use the Adam optimizer and the StepLR scheduler.

# training parameters

epochs = 5 if torch.cuda.is_available() else 1

learning_rate = 5e-4

train_batch_size = 32 if torch.cuda.is_available() else 1

test_batch_size = 3

# choose optimizer and scheduler

optimizer = torch.optim.Adam(model.parameters(), lr=learning_rate, weight_decay=1e-8)

# If working on CPU, start with a pretrained model to reduce training time

if not torch.cuda.is_available():

file_name = "demo_vanilla_unfolded.pth"

url = get_weights_url(model_name="demo", file_name=file_name)

ckpt = torch.hub.load_state_dict_from_url(

url, map_location=lambda storage, loc: storage, file_name=file_name

)

model.load_state_dict(ckpt["state_dict"])

optimizer.load_state_dict(ckpt["optimizer"])

# choose supervised training loss

losses = [dinv.loss.SupLoss(metric=dinv.metric.MSE())]

train_dataloader = DataLoader(

train_dataset, batch_size=train_batch_size, num_workers=num_workers, shuffle=True

)

test_dataloader = DataLoader(

test_dataset, batch_size=test_batch_size, num_workers=num_workers, shuffle=False

)

Train the network#

We train the network using the deepinv.Trainer class.

trainer = dinv.Trainer(

model,

physics=physics,

train_dataloader=train_dataloader,

eval_dataloader=test_dataloader,

epochs=epochs,

losses=losses,

optimizer=optimizer,

device=device,

early_stop=True, # set to None to disable early stopping

save_path=str(CKPT_DIR / operation),

verbose=verbose,

show_progress_bar=False, # disable progress bar for better vis in sphinx gallery.

)

model = trainer.train()

/local/jtachell/deepinv/deepinv/deepinv/training/trainer.py:1354: UserWarning: non_blocking_transfers=True but DataLoader.pin_memory=False; set pin_memory=True to overlap host-device copies with compute.

self.setup_train()

The model has 668238 trainable parameters

/local/jtachell/deepinv/deepinv/deepinv/training/trainer.py:521: UserWarning: early_stop should be an integer or None. Setting early_stop=3. This behaviour will be deprecated in future versions.

warnings.warn(

Train epoch 0: TotalLoss=0.009, PSNR=21.591

Eval epoch 0: PSNR=20.581

Best model saved at epoch 1

Train epoch 1: TotalLoss=0.007, PSNR=23.006

Eval epoch 1: PSNR=21.237

Best model saved at epoch 2

Train epoch 2: TotalLoss=0.007, PSNR=23.412

Eval epoch 2: PSNR=21.421

Best model saved at epoch 3

Train epoch 3: TotalLoss=0.006, PSNR=23.779

Eval epoch 3: PSNR=21.717

Best model saved at epoch 4

Train epoch 4: TotalLoss=0.006, PSNR=24.052

Eval epoch 4: PSNR=21.854

Best model saved at epoch 5

Test the network#

trainer.test(test_dataloader)

test_sample, _ = next(iter(test_dataloader))

model.eval()

test_sample = test_sample.to(device)

# Get the measurements and the ground truth

y = physics(test_sample)

with torch.no_grad():

rec = model(y, physics=physics)

backprojected = physics.A_adjoint(y)

dinv.utils.plot(

[backprojected, rec, test_sample],

titles=["Linear", "Reconstruction", "Ground truth"],

suptitle="Reconstruction results",

)

/local/jtachell/deepinv/deepinv/deepinv/training/trainer.py:1546: UserWarning: non_blocking_transfers=True but DataLoader.pin_memory=False; set pin_memory=True to overlap host-device copies with compute.

self.setup_train(train=False)

Eval epoch 0: PSNR=21.854, PSNR no learning=9.122

Test results:

PSNR no learning: 9.122 +- 2.903

PSNR: 21.854 +- 3.672

/local/jtachell/deepinv/deepinv/deepinv/utils/plotting.py:408: UserWarning: This figure was using a layout engine that is incompatible with subplots_adjust and/or tight_layout; not calling subplots_adjust.

fig.subplots_adjust(top=0.75)

Total running time of the script: (0 minutes 50.187 seconds)