L2

- class deepinv.optim.L2(sigma=1.0)

Bases: DataFidelity

Implementation of \(\distancename\) as the normalized squared \(\ell_2\) norm

\[f(x) = \frac{1}{2\sigma^2}\|\forw{x}-y\|^2\]

Up to an additive constant, this is the negative log-likelihood associated with additive Gaussian noise, where \(\sigma\) is set to the noise level.

- Parameters:

sigma (float) – Standard deviation of the noise to be used as a normalisation factor.

    >>> import torch
    >>> import deepinv as dinv
    >>> # define a loss function
    >>> fidelity = dinv.optim.L2()
    >>>
    >>> x = torch.ones(1, 1, 3, 3)
    >>> mask = torch.ones_like(x)
    >>> mask[0, 0, 1, 1] = 0
    >>> physics = dinv.physics.Inpainting(tensor_size=(1, 3, 3), mask=mask)
    >>> y = physics(x)
    >>>
    >>> # Compute the data fidelity f(Ax, y)
    >>> fidelity(x, y, physics)
    tensor([0.])
    >>> # Compute the gradient of f
    >>> fidelity.grad(x, y, physics)
    tensor([[[[0., 0., 0.],
              [0., 0., 0.],
              [0., 0., 0.]]]])
    >>> # Compute the proximity operator of f
    >>> fidelity.prox(x, y, physics, gamma=1.0)
    tensor([[[[1., 1., 1.],
              [1., 1., 1.],
              [1., 1., 1.]]]])
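The example above uses noiseless data, so every output is trivially zero or equal to the input. To show how \(\sigma\) scales the value and the gradient, here is a minimal pure-PyTorch sketch that does not call deepinv. It assumes the forward operator is a pixelwise mask, as in the inpainting example; the helper names `masked_forward`, `l2_fidelity`, and `l2_fidelity_grad` are hypothetical and not part of the library.

```python
import torch


def masked_forward(x, mask):
    # Hypothetical stand-in for an inpainting operator: A x = mask * x.
    return mask * x


def l2_fidelity(x, y, mask, sigma=1.0):
    # f(x) = 1 / (2 sigma^2) * ||A x - y||^2, one value per batch element.
    residual = masked_forward(x, mask) - y
    return residual.flatten(1).pow(2).sum(dim=1) / (2 * sigma**2)


def l2_fidelity_grad(x, y, mask, sigma=1.0):
    # Chain rule: grad f(x) = A^T (A x - y) / sigma^2; a mask is self-adjoint.
    return mask * (masked_forward(x, mask) - y) / sigma**2


x = torch.ones(1, 1, 3, 3)
mask = torch.ones_like(x)
mask[0, 0, 1, 1] = 0
y = masked_forward(2 * x, mask)  # measurements of a different image
sigma = 0.5

value = l2_fidelity(x, y, mask, sigma)  # 8 observed pixels, residual -1 each
grad = l2_fidelity_grad(x, y, mask, sigma)
```

With these inputs the eight observed pixels each contribute a squared residual of 1, so the value is \(8 / (2 \cdot 0.25) = 16\), and the gradient is \(-4\) on observed pixels and zero on the masked one.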

- d(u, y)

Computes the data fidelity distance \(\distance{u}{y}\), i.e.

\[\distance{u}{y} = \frac{1}{2\sigma^2}\|u-y\|^2\]

- Parameters:

u (torch.Tensor) – Variable \(u\) at which the data fidelity is computed.

y (torch.Tensor) – Data \(y\).

- Returns:

(torch.Tensor) data fidelity \(\distance{u}{y}\), a tensor of size B, where B is the batch size.
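The batch-wise return shape can be illustrated with a short sketch. It assumes the squared norm is summed over all non-batch dimensions, which is what a return value of size B implies; `l2_distance` is a hypothetical helper, not the library function.

```python
import torch


def l2_distance(u, y, sigma=1.0):
    # d(u, y) = 1 / (2 sigma^2) * ||u - y||^2, reduced over all non-batch dimensions.
    diff = (u - y).reshape(u.shape[0], -1)
    return diff.pow(2).sum(dim=1) / (2 * sigma**2)


# Batch of B = 2 images: all ones, and all twos.
u = torch.stack([torch.ones(1, 3, 3), 2 * torch.ones(1, 3, 3)])
y = torch.zeros_like(u)

dist = l2_distance(u, y, sigma=0.5)  # one scalar per batch element
```

Here the two squared norms are 9 and 36, and the factor \(1/(2\sigma^2)\) equals 2, so `dist` holds 18 and 72.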

- grad_d(u, y)

Computes the gradient of \(\distancename\), that is \(\nabla_{u}\distance{u}{y}\), i.e.

\[\nabla_{u}\distance{u}{y} = \frac{1}{\sigma^2}(u-y)\]

- Parameters:

u (torch.Tensor) – Variable \(u\) at which the gradient is computed.

y (torch.Tensor) – Data \(y\).

- Returns:

(torch.Tensor) gradient of the distance function \(\nabla_{u}\distance{u}{y}\).
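As a sanity check, the closed-form gradient can be compared against automatic differentiation. The sketch below uses only PyTorch and arbitrary random inputs; none of the variable names come from deepinv.

```python
import torch

sigma = 2.0
torch.manual_seed(0)
u = torch.randn(2, 1, 3, 3, requires_grad=True)
y = torch.randn(2, 1, 3, 3)

# Sum the per-sample distances so that one backward pass yields
# the gradient with respect to every batch element.
d_total = ((u - y) ** 2).sum() / (2 * sigma**2)
(autograd_grad,) = torch.autograd.grad(d_total, u)

# Closed form from the formula above: (u - y) / sigma^2.
closed_form = (u.detach() - y) / sigma**2
max_gap = (autograd_grad - closed_form).abs().max().item()
```

The two gradients should agree up to floating-point rounding, so `max_gap` is expected to be tiny.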

- prox(x, y, physics, gamma=1.0)

Proximal operator of \(\gamma \datafid{Ax}{y} = \frac{\gamma}{2\sigma^2}\|Ax-y\|^2\).

Computes \(\operatorname{prox}_{\gamma \datafidname}\), i.e.

\[\operatorname{prox}_{\gamma \datafidname}(x) = \underset{u}{\text{argmin}} \frac{\gamma}{2\sigma^2}\|Au-y\|_2^2+\frac{1}{2}\|u-x\|_2^2\]

- Parameters:

x (torch.Tensor) – Variable \(x\) at which the proximity operator is computed.

y (torch.Tensor) – Data \(y\).

physics (deepinv.physics.Physics) – physics model.

gamma (float) – stepsize of the proximity operator.

- Returns:

(torch.Tensor) proximity operator \(\operatorname{prox}_{\gamma \datafidname}(x)\).
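For a linear operator \(A\), setting the gradient of the objective above to zero gives \(u = (I + \tfrac{\gamma}{\sigma^2}A^\top A)^{-1}(x + \tfrac{\gamma}{\sigma^2}A^\top y)\). The sketch below works this out for the special case where \(A\) is a pixelwise mask, so the inverse reduces to an elementwise division. This is an illustration under that assumption and not necessarily how the library computes the result; `prox_l2_mask` is a hypothetical name.

```python
import torch


def prox_l2_mask(x, y, mask, gamma=1.0, sigma=1.0):
    # Closed-form minimizer of tau/2 * ||mask*u - y||^2 + 1/2 * ||u - x||^2,
    # with tau = gamma / sigma^2. A^T A is diagonal with entries mask^2.
    tau = gamma / sigma**2
    return (x + tau * mask * y) / (1 + tau * mask**2)


torch.manual_seed(0)
x = torch.randn(1, 1, 3, 3)
mask = torch.ones_like(x)
mask[0, 0, 1, 1] = 0
y = mask * torch.randn(1, 1, 3, 3)
gamma, sigma = 2.0, 0.5

u = prox_l2_mask(x, y, mask, gamma, sigma)

# First-order optimality: tau * A^T (A u - y) + (u - x) should vanish at the minimizer.
optimality_residual = (gamma / sigma**2) * mask * (mask * u - y) + (u - x)
```

On the masked pixel the data term carries no information, so the output there equals `x`. With `x` all ones, `y = mask * x`, and `gamma = sigma = 1`, the same formula returns all ones, in line with the doctest in the class description.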

- prox_d(x, y, gamma=1.0)

Proximal operator of \(\gamma \distance{x}{y} = \frac{\gamma}{2\sigma^2}\|x-y\|^2\).

Computes \(\operatorname{prox}_{\gamma \distancename}\), i.e.

\[\operatorname{prox}_{\gamma \distancename}(x) = \underset{u}{\text{argmin}} \frac{\gamma}{2\sigma^2}\|u-y\|_2^2+\frac{1}{2}\|u-x\|_2^2\]

- Parameters:

x (torch.Tensor) – Variable \(x\) at which the proximity operator is computed.

y (torch.Tensor) – Data \(y\).

gamma (float) – stepsize of the proximity operator.

- Returns:

(torch.Tensor) proximity operator \(\operatorname{prox}_{\gamma \distancename}(x)\).
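Without a forward operator the minimization above has the closed form \(u = (x + \tau y)/(1 + \tau)\) with \(\tau = \gamma/\sigma^2\), a weighted average of \(x\) and \(y\). The following sketch, with the hypothetical helper `prox_l2_distance`, shows how \(\gamma\) moves the result between the two.

```python
import torch


def prox_l2_distance(x, y, gamma=1.0, sigma=1.0):
    # Setting tau * (u - y) + (u - x) = 0 with tau = gamma / sigma^2
    # gives a weighted average of x and y.
    tau = gamma / sigma**2
    return (x + tau * y) / (1 + tau)


x = torch.zeros(1, 1, 2, 2)
y = torch.ones(1, 1, 2, 2)

halfway = prox_l2_distance(x, y, gamma=1.0, sigma=1.0)  # tau = 1: midpoint of x and y
near_x = prox_l2_distance(x, y, gamma=1e-6)  # small stepsize: stays close to x
near_y = prox_l2_distance(x, y, gamma=1e6)  # large stepsize: moves close to y
```

A small \(\gamma\) (or a large \(\sigma\)) keeps the output near \(x\), while a large \(\gamma\) (or a small \(\sigma\)) pulls it towards the data \(y\).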

Examples using L2:

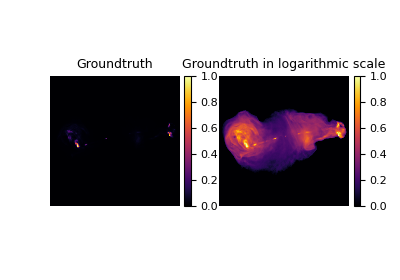

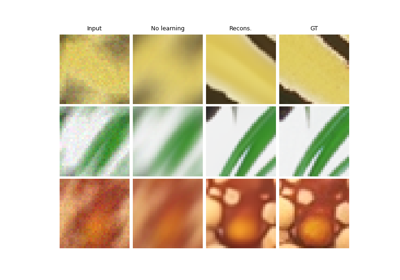

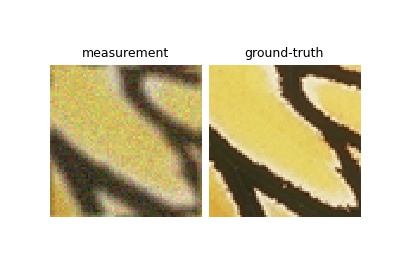

Random phase retrieval and reconstruction methods.

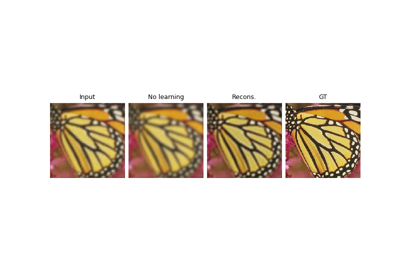

Regularization by Denoising (RED) for Super-Resolution.

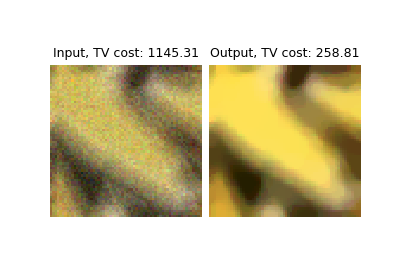

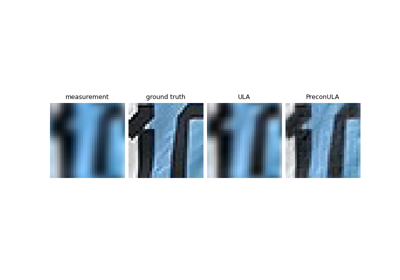

PnP with custom optimization algorithm (Condat-Vu Primal-Dual)

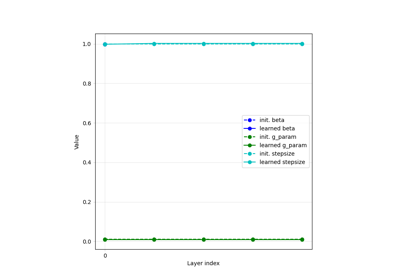

Learned Iterative Soft-Thresholding Algorithm (LISTA) for compressed sensing

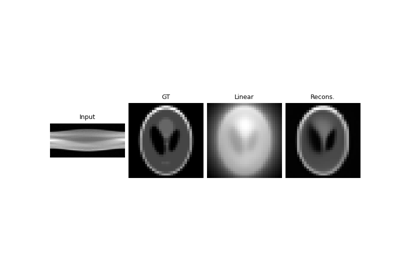

Deep Equilibrium (DEQ) algorithms for image deblurring