Implementing DPS#

In this tutorial, we go over the steps of the Diffusion Posterior Sampling (DPS) algorithm introduced in Chung et al.[1]. The full algorithm is implemented in deepinv.sampling.DPS.

Installing dependencies#

Let us import the relevant packages and load a sample image of size 64 x 64. This will be used as our ground truth image.

Note

We work with an image of size 64 x 64 to reduce the computational time of this example. The DiffUNet we use in the algorithm works best with images of size 256 x 256.

import torch

import deepinv as dinv
from deepinv.utils.plotting import plot
from deepinv.optim.data_fidelity import L2
from deepinv.utils import load_example
from tqdm import tqdm  # to visualize progress

device = dinv.utils.get_device()

x_true = load_example("butterfly.png", img_size=64).to(device)
x = x_true.clone()

Selected GPU 0 with 1991.125 MiB free memory

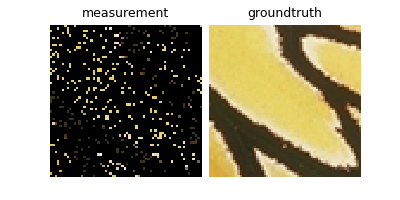

In this tutorial we consider random inpainting as the inverse problem, where the forward operator is implemented in deepinv.physics.Inpainting. In our example, 90% of the pixels are masked out at random, and the measurement is further corrupted by additive white Gaussian noise (AWGN) with standard deviation 12.75/255.
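To make the measurement model concrete, here is a minimal pure-Python sketch of random inpainting with additive Gaussian noise on a flat list of pixel values. It is only an illustration and does not use or reproduce deepinv.physics.Inpainting: the helper names `make_mask` and `inpaint` are hypothetical, and adding noise to every pixel (including masked ones) is an assumption of this sketch.

```python
import random


def make_mask(n_pixels, keep_fraction, rng):
    """Binary mask keeping round(keep_fraction * n_pixels) randomly chosen pixels."""
    n_keep = round(keep_fraction * n_pixels)
    kept = set(rng.sample(range(n_pixels), n_keep))
    return [1.0 if i in kept else 0.0 for i in range(n_pixels)]


def inpaint(x, mask, sigma, rng):
    """Toy forward model: y = mask * x + sigma * noise (noise on all pixels: an assumption)."""
    return [m * xi + sigma * rng.gauss(0.0, 1.0) for xi, m in zip(x, mask)]


rng = random.Random(0)
n_pixels = 64 * 64
x = [rng.random() for _ in range(n_pixels)]             # stand-in for an image in [0, 1]
mask = make_mask(n_pixels, keep_fraction=0.1, rng=rng)  # 90% of the pixels are masked out
y = inpaint(x, mask, sigma=12.75 / 255, rng=rng)

print(sum(mask) / n_pixels)  # fraction of observed pixels, close to 0.1
```

With only about one pixel in ten observed, the data term alone cannot determine the image, which is why a strong prior such as a diffusion model is needed.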

Diffusion model loading#

We use a pre-trained diffusion model that was also used for the DiffPIR algorithm, namely the one trained on the FFHQ 256x256 dataset. This means that the diffusion model was trained on human face images, which are very different from the image considered in our example. Nevertheless, we will see later that DPS generalizes sufficiently well even in this case.

model = dinv.models.DiffUNet(large_model=False).to(device)

Define diffusion schedule#

We will use the standard linear diffusion noise schedule. Once \(\beta_t\) is defined to follow a linear schedule that interpolates between \(\beta_{\rm min}\) and \(\beta_{\rm max}\), we have the following additional definitions: \(\alpha_t := 1 - \beta_t\), \(\bar\alpha_t := \prod_{j=1}^t \alpha_j\). The following equations will also be useful later on (we always assume that \(\mathbf{\epsilon} \sim \mathcal{N}(\mathbf{0}, \mathbf{I})\) hereafter):

\[\mathbf{x}_t = \sqrt{1 - \beta_t}\,\mathbf{x}_{t-1} + \sqrt{\beta_t}\,\mathbf{\epsilon}\]

\[\mathbf{x}_t = \sqrt{\bar\alpha_t}\,\mathbf{x}_0 + \sqrt{1 - \bar\alpha_t}\,\mathbf{\epsilon}\]

where we use the reparametrization trick.

num_train_timesteps = 1000  # Number of timesteps used during training

betas = torch.linspace(1e-4, 2e-2, num_train_timesteps).to(device)
# Note: `alphas` stores the cumulative products, i.e. \bar\alpha_t in the notation above
alphas = (1 - betas).cumprod(dim=0)
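As a quick sanity check on the schedule, the following pure-Python sketch recomputes \(\bar\alpha_t\) as a running product and the corresponding noise level \(\sqrt{1 - \bar\alpha_t}/\sqrt{\bar\alpha_t}\), so the values can be inspected without PyTorch. The names `alpha_bars` and `sigma` are illustrative and not part of DeepInverse.

```python
import math

num_train_timesteps = 1000
beta_min, beta_max = 1e-4, 2e-2

# Linear schedule with the same endpoints as torch.linspace(1e-4, 2e-2, 1000)
betas = [
    beta_min + (beta_max - beta_min) * t / (num_train_timesteps - 1)
    for t in range(num_train_timesteps)
]

# alpha_bars[t] is the product of (1 - beta_j) over all j <= t
alpha_bars = []
running = 1.0
for beta in betas:
    running *= 1.0 - beta
    alpha_bars.append(running)


def sigma(t):
    """Noise level of x_t / sqrt(alpha_bar_t) relative to x_0."""
    return math.sqrt(1.0 - alpha_bars[t]) / math.sqrt(alpha_bars[t])


for t in (0, 200, 999):
    print(t, alpha_bars[t], sigma(t))
```

The cumulative product decreases monotonically from almost 1 to almost 0, so the noise level grows from nearly zero at the first timestep to a very large value at the last one.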

The DPS algorithm#

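Now that the inverse problem is defined, we can apply the DPS algorithm to solve it. DPS is a diffusion algorithm that alternates between a denoising step, a gradient step and a reverse diffusion sampling step. For \(t\) decreasing from \(T\) to \(1\), the algorithm reads:

\[\begin{aligned}
\widehat{\mathbf{x}}_t &= \operatorname{D}_{\sigma_t}(\mathbf{x}_t), \qquad \sigma_t = \sqrt{1 - \overline{\alpha}_t}/\sqrt{\overline{\alpha}_t} \\
\mathbf{g}_t &= \nabla_{\mathbf{x}_t} \log p(\mathbf{y}|\widehat{\mathbf{x}}_t(\mathbf{x}_t)) \\
\mathbf{\epsilon}_t &\sim \mathcal{N}(\mathbf{0}, \mathbf{I}) \\
\mathbf{x}_{t-1} &= a_t \mathbf{x}_t + b_t \widehat{\mathbf{x}}_t + \tilde{\sigma}_t \mathbf{\epsilon}_t + \mathbf{g}_t
\end{aligned}\]

where \(\operatorname{D}_{\sigma}(\cdot)\) is a denoising network for noise level \(\sigma\), \(\eta\) is a hyperparameter in [0, 1], and the constants \(\tilde{\sigma}_t, a_t, b_t\) are defined as

\[\begin{aligned}
\tilde{\sigma}_t &= \eta \sqrt{\left(1 - \frac{\overline{\alpha}_t}{\overline{\alpha}_{t-1}}\right) \frac{1 - \overline{\alpha}_{t-1}}{1 - \overline{\alpha}_t}} \\
a_t &= \sqrt{1 - \overline{\alpha}_{t-1} - \tilde{\sigma}_t^2} \,/\, \sqrt{1 - \overline{\alpha}_t} \\
b_t &= \sqrt{\overline{\alpha}_{t-1}} - \sqrt{1 - \overline{\alpha}_{t-1} - \tilde{\sigma}_t^2} \, \frac{\sqrt{\overline{\alpha}_t}}{\sqrt{1 - \overline{\alpha}_t}}
\end{aligned}\]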

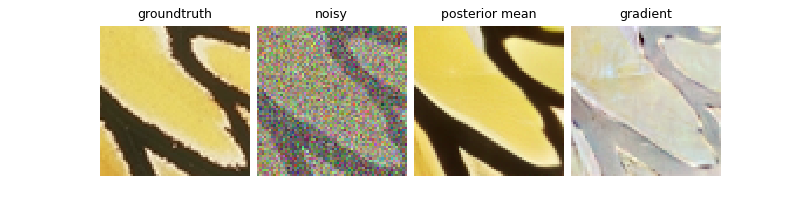
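The constants \(\tilde{\sigma}_t, a_t, b_t\) can be checked numerically. The sketch below is a minimal pure-Python version whose formulas are read off from the sampling loop later in this tutorial; the function name `ddim_coefficients` and the two \(\bar\alpha\) values are hypothetical and chosen only for illustration.

```python
import math


def ddim_coefficients(abar_t, abar_prev, eta):
    """Return (sigma_tilde, a_t, b_t) for x_{t-1} = a_t x_t + b_t x0_hat + sigma_tilde eps."""
    beta_eff = 1.0 - abar_t / abar_prev  # effective beta between the two visited timesteps
    sigma_tilde = eta * math.sqrt(beta_eff * (1.0 - abar_prev) / (1.0 - abar_t))
    c2 = math.sqrt(1.0 - abar_prev - sigma_tilde**2)
    a_t = c2 / math.sqrt(1.0 - abar_t)
    b_t = math.sqrt(abar_prev) - c2 * math.sqrt(abar_t) / math.sqrt(1.0 - abar_t)
    return sigma_tilde, a_t, b_t


abar_t, abar_prev = 0.5, 0.6  # hypothetical values of alpha_bar_t and alpha_bar_{t-1}

# eta = 0: deterministic DDIM update, no fresh noise is injected
sigma0, a0, b0 = ddim_coefficients(abar_t, abar_prev, eta=0.0)

# eta = 1: sigma_tilde^2 equals the DDPM posterior variance
sigma1, a1, b1 = ddim_coefficients(abar_t, abar_prev, eta=1.0)
ddpm_var = (1 - abar_t / abar_prev) * (1 - abar_prev) / (1 - abar_t)

# Bookkeeping check: if x_t = sqrt(abar_t) x0 + sqrt(1 - abar_t) eps and x0_hat = x0,
# then x_{t-1} has signal scale sqrt(abar_prev) and noise variance 1 - abar_prev.
signal = a1 * math.sqrt(abar_t) + b1
noise_var = a1**2 * (1 - abar_t) + sigma1**2
print(sigma0, sigma1**2, ddpm_var, signal, noise_var)
```

The last two quantities show why the update is consistent with the forward process: whatever the value of \(\eta\), the next iterate has the signal scale and noise variance expected at timestep \(t-1\).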

Denoising step#

The first step of DPS consists of applying a denoiser function to the current image \(\mathbf{x}_t\), with standard deviation \(\sigma_t = \sqrt{1 - \overline{\alpha}_t}/\sqrt{\overline{\alpha}_t}\).

This is equivalent to sampling \(\mathbf{x}_t \sim q(\mathbf{x}_t|\mathbf{x}_0)\), and then computing the posterior mean.
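For intuition, the posterior mean can be written in closed form when the prior is Gaussian. The sketch below is a toy one-dimensional check, not the DiffUNet denoiser: it assumes a hypothetical prior \(x_0 \sim \mathcal{N}(\mu, s^2)\) and verifies that Tweedie's formula agrees with the standard Gaussian posterior mean.

```python
import random

mu, s = 0.3, 0.5   # hypothetical Gaussian prior x0 ~ N(mu, s^2)
sigma_t = 0.8      # noise level of the current iterate


def score(xt):
    """Score of the marginal p(x_t) = N(mu, s^2 + sigma_t^2)."""
    return -(xt - mu) / (s**2 + sigma_t**2)


def posterior_mean_tweedie(xt):
    """Tweedie's formula: E[x0 | x_t] = x_t + sigma_t^2 * score(x_t)."""
    return xt + sigma_t**2 * score(xt)


def posterior_mean_closed_form(xt):
    """Gaussian conjugacy result for the same toy model."""
    return (s**2 * xt + sigma_t**2 * mu) / (s**2 + sigma_t**2)


rng = random.Random(0)
x0 = rng.gauss(mu, s)
xt = x0 + sigma_t * rng.gauss(0.0, 1.0)  # noisy observation of x0 at noise level sigma_t

print(posterior_mean_tweedie(xt), posterior_mean_closed_form(xt))
```

In DPS the score is not known analytically, so the denoising network plays the role of `posterior_mean_tweedie`.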

DPS approximation#

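In order to perform gradient-based posterior sampling with diffusion models, we have to be able to compute \(\nabla_{\mathbf{x}_t} \log p(\mathbf{x}_t|\mathbf{y})\). Applying Bayes' rule, we have

\[\nabla_{\mathbf{x}_t} \log p(\mathbf{x}_t|\mathbf{y}) = \nabla_{\mathbf{x}_t} \log p(\mathbf{x}_t) + \nabla_{\mathbf{x}_t} \log p(\mathbf{y}|\mathbf{x}_t)\]

For the former term, we can simply plug in our estimated score function as in Tweedie's formula. As the latter term is intractable, DPS proposes the following approximation (for details, see Theorem 1 of Chung et al.[1]):

\[\nabla_{\mathbf{x}_t} \log p(\mathbf{y}|\mathbf{x}_t) \simeq \nabla_{\mathbf{x}_t} \log p(\mathbf{y}|\hat{\mathbf{x}}_t(\mathbf{x}_t)), \qquad \hat{\mathbf{x}}_t = \mathbb{E}[\mathbf{x}_0|\mathbf{x}_t]\]

Remarkably, we can now compute the latter term when we have Gaussian noise, as

\[\log p(\mathbf{y}|\hat{\mathbf{x}}_t) = -\frac{\|\mathbf{y} - \mathbf{A}\hat{\mathbf{x}}_t\|_2^2}{2\sigma_y^2} + \text{const}\]

where \(\mathbf{A}\) denotes the forward operator and \(\sigma_y\) the standard deviation of the measurement noise. Moreover, taking the gradient w.r.t. \(\mathbf{x}_t\) can be performed through automatic differentiation.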

Let's see how this can be done in PyTorch. Note that when taking the gradient w.r.t. a tensor, we first have to enable gradient computation with tensor.requires_grad_().

Note

The DPS algorithm assumes that the images are in the range [-1, 1], whereas standard denoisers usually output images in the range [0, 1]. This is why we rescale the images before applying the steps.
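The rescaling also explains why the code passes half of the noise level to the denoiser: mapping from [-1, 1] to [0, 1] divides every value by two, and the noise standard deviation with it. The following pure-Python sketch checks this on a few random values; the helper names `to_unit_range` and `to_symmetric_range` are hypothetical.

```python
import random


def to_unit_range(x):
    """Map values from [-1, 1] to [0, 1]."""
    return [v / 2 + 0.5 for v in x]


def to_symmetric_range(x):
    """Map values from [0, 1] to [-1, 1]."""
    return [2 * v - 1 for v in x]


rng = random.Random(0)
sigma = 0.4
x0 = [rng.uniform(-1, 1) for _ in range(5)]    # clean signal in [-1, 1]
eps = [rng.gauss(0.0, 1.0) for _ in range(5)]  # one noise realisation
xt = [a + sigma * e for a, e in zip(x0, eps)]  # noisy signal with std sigma

# After rescaling, the same noise realisation appears with std sigma / 2
lhs = to_unit_range(xt)
rhs = [a + (sigma / 2) * e for a, e in zip(to_unit_range(x0), eps)]
print(max(abs(l - r) for l, r in zip(lhs, rhs)))
```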

x0 = x_true * 2.0 - 1.0  # [0, 1] -> [-1, 1]

data_fidelity = L2()

# Noisy sample at noise level sigma_t
# (this is x_t / sqrt(alpha_bar_t) for x_t ~ q(x_t|x_0))
t = 200  # choose some arbitrary timestep
at = alphas[t]
sigma_cur = (1 - at).sqrt() / at.sqrt()
xt = x0 + sigma_cur * torch.randn_like(x0)

# DPS
# NOTE: `physics` (the inpainting operator described above) and the
# measurement `y` must already be defined at this point.
with torch.enable_grad():
    # Turn on gradient
    xt.requires_grad_()

    # normalize to [0, 1], denoise, and rescale to [-1, 1]
    x0_t = model(xt / 2 + 0.5, sigma_cur / 2) * 2 - 1

    # l2 norm of the residual (up to a constant factor),
    # used in place of the log-likelihood
    ll = data_fidelity(x0_t, y, physics).sqrt().sum()

# Take gradient w.r.t. xt
grad_ll = torch.autograd.grad(outputs=ll, inputs=xt)[0]

# Visualize
plot(
    {
        "Ground Truth": x0,
        "Noisy": xt,
        "Posterior Mean": x0_t,
        "Gradient": grad_ll,
    }
)

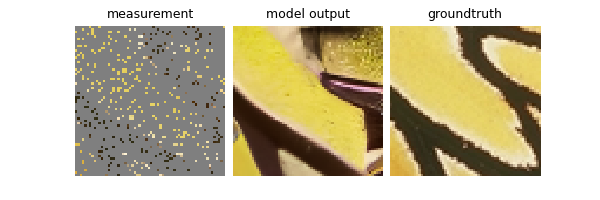
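What automatic differentiation returns here can be verified by hand on a toy problem. The sketch below assumes a hypothetical pixelwise linear "denoiser" \(D(x) = 0.7\,x\) and a fixed binary mask as the forward operator, and compares the chain-rule gradient of the residual norm with central finite differences. It illustrates the quantity being differentiated and is not the DiffUNet computation.

```python
import math

mask = [1.0, 0.0, 1.0, 0.0]  # hypothetical inpainting mask
shrink = 0.7                 # toy "denoiser": D(x) = shrink * x
y = [0.5, 0.0, -0.2, 0.0]    # hypothetical measurement


def residual_norm(xt):
    """||y - A D(x_t)||_2 with A = mask and D = linear shrinkage."""
    return math.sqrt(sum((yi - m * shrink * xi) ** 2 for yi, m, xi in zip(y, mask, xt)))


def analytic_grad(xt):
    """Chain rule by hand: what backpropagation returns for this toy model."""
    r = [yi - m * shrink * xi for yi, m, xi in zip(y, mask, xt)]
    norm = math.sqrt(sum(ri**2 for ri in r))
    return [-m * shrink * ri / norm for m, ri in zip(mask, r)]


def finite_difference_grad(xt, h=1e-6):
    """Central finite differences, one coordinate at a time."""
    grad = []
    for i in range(len(xt)):
        up = xt[:i] + [xt[i] + h] + xt[i + 1:]
        down = xt[:i] + [xt[i] - h] + xt[i + 1:]
        grad.append((residual_norm(up) - residual_norm(down)) / (2 * h))
    return grad


xt = [0.1, 0.9, 0.4, -0.3]
g_exact = analytic_grad(xt)
g_fd = finite_difference_grad(xt)
print(g_exact)
print(g_fd)
```

In this toy model masked pixels receive zero gradient because the denoiser acts on each pixel independently. A convolutional network mixes neighbouring pixels, so the gradient through a real denoiser also reaches unobserved locations.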

DPS Algorithm#

Having visited all the key components of DPS, we are now ready to define the algorithm. At every denoising timestep, the algorithm iterates the following steps:

1. Get \(\hat{\mathbf{x}}_t\) using the denoiser network.
2. Compute \(\nabla_{\mathbf{x}_t} \log p(\mathbf{y}|\hat{\mathbf{x}}_t)\) through backpropagation.
3. Perform reverse diffusion sampling with DDPM/DDIM, corresponding to an update with \(\nabla_{\mathbf{x}_t} \log p(\mathbf{x}_t)\).
4. Take a gradient step with \(\nabla_{\mathbf{x}_t} \log p(\mathbf{y}|\hat{\mathbf{x}}_t)\).

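There are two caveats here. First, in the original work, DPS used DDPM ancestral sampling. As the DDIM sampler of Song et al.[2] is a generalization of DDPM, in the sense that it recovers DDPM when \(\eta = 1.0\), we consider DDIM sampling here. One can freely choose the \(\eta\) parameter, but since we consider 1000 neural function evaluations (NFEs), it is advisable to keep \(\eta = 1.0\).

Second, when taking the log-likelihood gradient step, the gradient is weighted so that the actual implementation is a static step size times the gradient of the \(\ell_2\) norm of the residual:

\[\nabla_{\mathbf{x}_t} \log p(\mathbf{y}|\hat{\mathbf{x}}_t(\mathbf{x}_t)) \simeq -\rho \, \nabla_{\mathbf{x}_t} \|\mathbf{y} - \mathbf{A}\hat{\mathbf{x}}_t\|_2\]

where \(\mathbf{A}\) is the forward operator and \(\rho\) denotes the static step size (the code below uses \(\rho = 1\)).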

With these in mind, let us solve the inverse problem with DPS!

Note

We only use 200 steps to reduce the computational time of this example. As suggested by the authors of DPS, the algorithm works best with num_steps = 1000.

num_steps = 200
skip = num_train_timesteps // num_steps

batch_size = 1
eta = 1.0  # DDPM scheme; use eta < 1 for DDIM

# measurement (`physics` is the inpainting operator described earlier)
x0 = x_true * 2.0 - 1.0
# x0 = x_true.clone()
y = physics(x0.to(device))

# initial sample from x_T
x = torch.randn_like(x0)

xs = [x]
x0_preds = []

for t in tqdm(reversed(range(0, num_train_timesteps, skip))):
    at = alphas[t]
    # alpha_bar at the next (less noisy) timestep; equal to 1 at the final step
    at_next = alphas[t - skip] if t - skip >= 0 else torch.tensor(1.0, device=device)
    # we cannot use bt = betas[t] if skip > 1:
    bt = 1 - at / at_next

    xt = xs[-1].to(device)

    with torch.enable_grad():
        xt.requires_grad_()

        # 1. denoising step
        aux_x = xt / (2 * at.sqrt()) + 0.5  # renormalize in [0, 1]
        sigma_cur = (1 - at).sqrt() / at.sqrt()  # sigma_t

        x0_t = 2 * model(aux_x, sigma_cur / 2) - 1
        x0_t = torch.clip(x0_t, -1.0, 1.0)  # optional

        # 2. likelihood gradient approximation
        l2_loss = data_fidelity(x0_t, y, physics).sqrt().sum()

    norm_grad = torch.autograd.grad(outputs=l2_loss, inputs=xt)[0]
    norm_grad = norm_grad.detach()

    sigma_tilde = (bt * (1 - at_next) / (1 - at)).sqrt() * eta
    c2 = ((1 - at_next) - sigma_tilde**2).sqrt()

    # 3. noise step
    epsilon = torch.randn_like(xt)

    # 4. DDIM(PM) step
    xt_next = (
        (at_next.sqrt() - c2 * at.sqrt() / (1 - at).sqrt()) * x0_t
        + sigma_tilde * epsilon
        + c2 * xt / (1 - at).sqrt()
        - norm_grad
    )

    # detach so that the autograd graph is not carried over to the next iteration
    x0_preds.append(x0_t.detach().to("cpu"))
    xs.append(xt_next.detach().to("cpu"))

recon = xs[-1]

# plot the results
x = recon / 2 + 0.5

plot(
    {
        "Measurement": y,
        "Model Output": x,
        "Ground Truth": x_true,
    }
)
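The comment about not using betas[t] when skip > 1 can be illustrated without PyTorch. The sketch below lists the timesteps visited by the loop above and compares the effective \(\beta\) between two visited timesteps with the training value; it rebuilds the same linear schedule in pure Python, and the names `alpha_bars` and `beta_eff_*` are illustrative.

```python
num_train_timesteps = 1000
num_steps = 200
skip = num_train_timesteps // num_steps

# Timesteps visited by the sampling loop, from the noisiest to the cleanest
timesteps = list(reversed(range(0, num_train_timesteps, skip)))

# Same linear schedule as in the tutorial, rebuilt without PyTorch
betas = [1e-4 + (2e-2 - 1e-4) * t / (num_train_timesteps - 1) for t in range(num_train_timesteps)]
alpha_bars = []
running = 1.0
for beta in betas:
    running *= 1.0 - beta
    alpha_bars.append(running)

t = 500
beta_eff_skip1 = 1 - alpha_bars[t] / alpha_bars[t - 1]     # one training step: equals betas[t]
beta_eff_skip5 = 1 - alpha_bars[t] / alpha_bars[t - skip]  # accumulates `skip` training steps

print(timesteps[:3], timesteps[-3:], len(timesteps))  # [995, 990, 985] [10, 5, 0] 200
print(betas[t], beta_eff_skip1, beta_eff_skip5)
```

With skip = 5 the effective \(\beta\) is roughly five times the training value at the same timestep, which is why the loop derives it from the ratio of cumulative products instead of indexing betas directly.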

Using DPS in your inverse problem#

You can readily use this algorithm via the deepinv.sampling.DPS class.

y = physics(x)

model = dinv.sampling.DPS(dinv.models.DiffUNet(), data_fidelity=dinv.optim.data_fidelity.L2())

xhat = model(y, physics)

- References:
