Note

New to DeepInverse? Get started with the basics with the 5 minute quickstart tutorial..

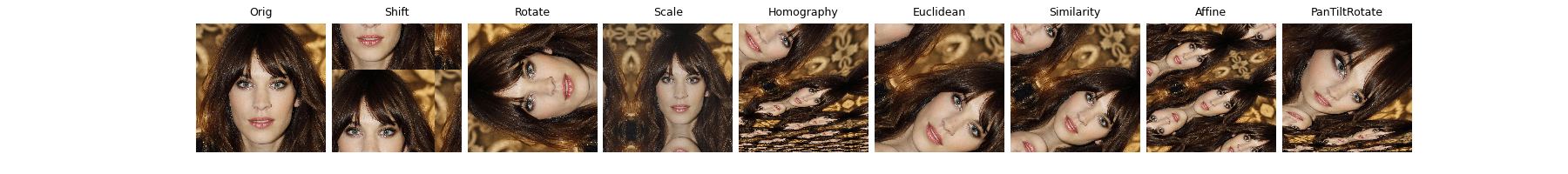

Image transformations for Equivariant Imaging#

This example demonstrates various geometric image transformations

implemented in deepinv that can be used in Equivariant Imaging (EI)

for self-supervised learning:

Shift: integer pixel 2D shift;

Rotate: 2D image rotation;

Scale: continuous 2D image downscaling;

Euclidean: includes continuous translation, rotation, and reflection, forming the group \(\mathbb{E}(2)\);

Similarity: as above but includes scale, forming the group \(\text{S}(2)\);

Affine: as above but includes shear effects, forming the group \(\text{Aff}(3)\);

Homography: as above but includes perspective (i.e pan and tilt) effects, forming the group \(\text{PGL}(3)\);

PanTiltRotate: pure 3D camera rotation i.e pan, tilt and 2D image rotation.

See docs for full list.

These were proposed in the papers:

Shift,Rotate: Chen et al.[1].Scale: Scanvic et al.[2].Homographyand the projective geometry framework: Wang and Davies[3].

import torch

from torch.utils.data import DataLoader, random_split

from torchvision.transforms import Compose, ToTensor, CenterCrop, Resize

import deepinv as dinv

from deepinv.utils import get_data_home

device = dinv.utils.get_device()

ORIGINAL_DATA_DIR = get_data_home() / "Urban100"

Selected GPU 0 with 4094.25 MiB free memory

Define transforms. For the transforms that involve 3D camera rotation

(i.e pan or tilt), we limit theta_max for display.

transforms = [

dinv.transform.Shift(),

dinv.transform.Rotate(),

dinv.transform.Scale(),

dinv.transform.Homography(theta_max=10),

dinv.transform.projective.Euclidean(),

dinv.transform.projective.Similarity(),

dinv.transform.projective.Affine(),

dinv.transform.projective.PanTiltRotate(theta_max=10),

]

/local/jtachell/deepinv/deepinv/deepinv/transform/rotate.py:49: UserWarning: The default interpolation mode will be changed to bilinear interpolation in the near future. Please specify the interpolation mode explicitly if you plan to keep using nearest interpolation.

warn(

Plot transforms on a sample image. Note that, during training, we never

have access to these ground truth images x, only partial and noisy

measurements y.

x = dinv.utils.load_example("celeba_example.jpg")

dinv.utils.plot(

[x] + [t(x) for t in transforms],

["Orig"] + [t.__class__.__name__ for t in transforms],

fontsize=24,

)

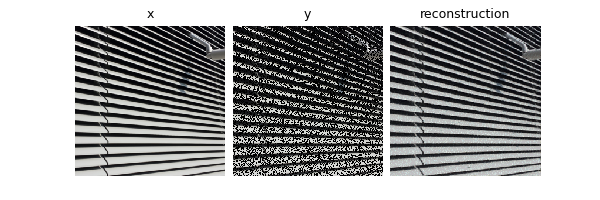

Now, we run an inpainting experiment to reconstruct images from images masked with a random mask, without ground truth, using EI. For this example we use the Urban100 images of natural urban scenes. As these scenes are imaged with a camera free to move and rotate in the world, all of the above transformations are valid invariances that we can impose on the unknown image set \(x\in X\).

dataset = dinv.datasets.Urban100HR(

root=ORIGINAL_DATA_DIR,

download=True,

transform=Compose([ToTensor(), Resize(256), CenterCrop(256)]),

)

train_dataset, test_dataset = random_split(dataset, (0.8, 0.2))

train_dataloader = DataLoader(train_dataset, shuffle=True)

test_dataloader = DataLoader(test_dataset)

# Use physics to generate data online

physics = dinv.physics.Inpainting((3, 256, 256), mask=0.6, device=device)

0%| | 0/135388067 [00:00<?, ?it/s]

7%|▋ | 9.44M/129M [00:00<00:01, 98.5MB/s]

16%|█▌ | 20.2M/129M [00:00<00:01, 107MB/s]

24%|██▍ | 31.1M/129M [00:00<00:00, 110MB/s]

32%|███▏ | 41.9M/129M [00:00<00:00, 111MB/s]

41%|████ | 52.8M/129M [00:00<00:00, 112MB/s]

49%|████▉ | 63.7M/129M [00:00<00:00, 113MB/s]

58%|█████▊ | 74.5M/129M [00:00<00:00, 111MB/s]

66%|██████▌ | 85.1M/129M [00:00<00:00, 109MB/s]

74%|███████▍ | 95.6M/129M [00:00<00:00, 107MB/s]

82%|████████▏ | 106M/129M [00:01<00:00, 107MB/s]

90%|████████▉ | 116M/129M [00:01<00:00, 106MB/s]

98%|█████████▊| 126M/129M [00:01<00:00, 105MB/s]

100%|██████████| 129M/129M [00:01<00:00, 108MB/s]

Extracting: 0%| | 0/101 [00:00<?, ?it/s]

Extracting: 9%|▉ | 9/101 [00:00<00:01, 89.49it/s]

Extracting: 21%|██ | 21/101 [00:00<00:00, 101.01it/s]

Extracting: 33%|███▎ | 33/101 [00:00<00:00, 108.70it/s]

Extracting: 49%|████▊ | 49/101 [00:00<00:00, 124.24it/s]

Extracting: 61%|██████▏ | 62/101 [00:00<00:00, 121.64it/s]

Extracting: 74%|███████▍ | 75/101 [00:00<00:00, 110.83it/s]

Extracting: 86%|████████▌ | 87/101 [00:00<00:00, 112.11it/s]

Extracting: 98%|█████████▊| 99/101 [00:00<00:00, 107.60it/s]

Extracting: 100%|██████████| 101/101 [00:00<00:00, 111.55it/s]

Dataset has been successfully downloaded.

For training, use a small UNet, Adam optimizer, EI loss with homography

transform, and the deepinv.Trainer functionality:

Note

We only train for a single epoch in the demo, but it is recommended to train multiple epochs in practice.

To simulate a realistic self-supervised learning scenario, we do not use any supervised metrics for training, such as PSNR or SSIM, which require clean ground truth images.

Tip

We can use the same self-supervised loss for evaluation, as it does not require clean images,

to monitor the training process (e.g. for early stopping). This is done automatically when metrics=None and early_stop>0 in the trainer.

model = dinv.models.UNet(

in_channels=3, out_channels=3, scales=2, circular_padding=True, batch_norm=False

).to(device)

losses = [

dinv.loss.MCLoss(),

dinv.loss.EILoss(dinv.transform.Homography(theta_max=10, device=device)),

]

optimizer = torch.optim.Adam(model.parameters(), lr=1e-3, weight_decay=1e-8)

model = dinv.Trainer(

model=model,

physics=physics,

online_measurements=True,

train_dataloader=train_dataloader,

eval_dataloader=test_dataloader,

compute_eval_losses=True, # use self-supervised loss for evaluation

early_stop_on_losses=True, # stop using self-supervised eval loss

epochs=1,

losses=losses,

metrics=None, # no supervised metrics

early_stop=2, # we can use early stopping as we have a validation set

optimizer=optimizer,

verbose=True,

show_progress_bar=False,

save_path=None,

device=device,

).train()

/local/jtachell/deepinv/deepinv/deepinv/training/trainer.py:1354: UserWarning: non_blocking_transfers=True but DataLoader.pin_memory=False; set pin_memory=True to overlap host-device copies with compute.

self.setup_train()

The model has 444867 trainable parameters

Train epoch 0: MCLoss=0.008, EILoss=0.027, TotalLoss=0.036

Eval epoch 0: MCLoss=0.007, EILoss=0.025, TotalLoss=0.032

Best model saved at epoch 1

Show results of a pretrained model trained using a larger UNet for 40 epochs:

model = dinv.models.UNet(

in_channels=3, out_channels=3, scales=3, circular_padding=True, batch_norm=False

).to(device)

ckpt = torch.hub.load_state_dict_from_url(

dinv.models.utils.get_weights_url("ei", "Urban100_inpainting_homography_model.pth"),

map_location=device,

)

model.load_state_dict(ckpt["state_dict"])

x = next(iter(train_dataloader))

x = x.to(device)

y = physics(x)

x_hat = model(y)

dinv.utils.plot([x, y, x_hat], ["x", "y", "reconstruction"])

- References:

Total running time of the script: (0 minutes 10.508 seconds)