PanNet

- class deepinv.models.PanNet(backbone_net=None, hrms_shape=(4, 900, 900), scale_factor=4, highpass_kernel_size=5, device='cpu', **kwargs)

Bases:

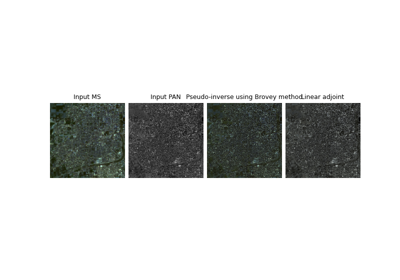

ModulePanNet architecture for pan-sharpening.

PanNet neural network from Yang et al. [1].

Takes input measurements as a deepinv.utils.TensorList with elements (MS, PAN), where MS is the low-resolution multispectral image of shape (B, C, H, W) and PAN is the high-resolution panchromatic image of shape (B, 1, H*r, W*r), where r is the pan-sharpening factor.

- Parameters:

backbone_net (torch.nn.Module) – Backbone neural network, e.g. ResNet. If None, defaults to a simple ResNet.

hrms_shape (tuple[int]) – shape of high-resolution multispectral images (C,H,W), defaults to (4,900,900)

scale_factor (int) – pansharpening downsampling ratio HR/LR, defaults to 4

highpass_kernel_size (int) – square kernel size for extracting high-frequency features, defaults to 5

device (str) – torch device, defaults to “cpu”
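The highpass_kernel_size parameter controls how high-frequency detail is extracted before it reaches the backbone. The following is a minimal NumPy sketch of one common way to do this: subtract a box-blurred copy of the image from itself. It is an illustration only. The function name box_highpass, the edge-replication padding, and the use of a plain mean filter are assumptions, and the filter actually used inside deepinv may differ.

```python
import numpy as np


def box_highpass(img: np.ndarray, kernel_size: int = 5) -> np.ndarray:
    """Hypothetical helper: high-pass an image of shape (C, H, W).

    Assumes a square mean (box) filter as the low-pass step; this is a
    sketch, not the filter used by the library.
    """
    if kernel_size % 2 == 0:
        raise ValueError("kernel_size must be odd")
    pad = kernel_size // 2
    # Replicate the borders so the output keeps the input's spatial size.
    padded = np.pad(img, ((0, 0), (pad, pad), (pad, pad)), mode="edge")
    h, w = img.shape[-2:]
    blurred = np.zeros(img.shape, dtype=float)
    # Accumulate every shifted copy covered by the kernel, then average.
    for dy in range(kernel_size):
        for dx in range(kernel_size):
            blurred += padded[:, dy:dy + h, dx:dx + w]
    blurred /= kernel_size ** 2
    # High-frequency residual = original minus its local mean.
    return img - blurred
```

A constant image has no high-frequency content, so the residual is zero everywhere. A larger kernel removes a smoother low-pass component and therefore keeps more mid-frequency detail in the residual.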

- References:

- create_sampler(direction, hr_shape, noise_gain=0.0)

Helper function for downsampling/upsampling images (useful for reduced-resolution training with Wald’s protocol).
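To make the idea of reduced-resolution training concrete, here is a small NumPy sketch of Wald's protocol: both the MS and PAN images are downsampled by the factor r, and the original MS image serves as the training target. This is not the implementation of create_sampler. The names avg_downsample and wald_training_triplet are hypothetical, plain average pooling stands in for the real downsampling operator, and noise (cf. noise_gain) is ignored.

```python
import numpy as np


def avg_downsample(img: np.ndarray, r: int) -> np.ndarray:
    """Average-pool an array of shape (C, H, W) by an integer factor r."""
    c, h, w = img.shape
    if h % r or w % r:
        raise ValueError("H and W must be divisible by r")
    # Split each spatial axis into (blocks, r) and average within each block.
    return img.reshape(c, h // r, r, w // r, r).mean(axis=(2, 4))


def wald_training_triplet(ms: np.ndarray, pan: np.ndarray, r: int = 4):
    """Hypothetical helper returning (ms_reduced, pan_reduced, target).

    The reduced pair plays the role of the network input, and the original
    MS image plays the role of the high-resolution ground truth.
    """
    return avg_downsample(ms, r), avg_downsample(pan, r), ms
```

With an MS image of shape (4, 16, 16), a PAN image of shape (1, 64, 64) and r = 4, the reduced inputs have shapes (4, 4, 4) and (1, 16, 16), so the reduced PAN matches the resolution of the original MS target.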

- forward(y, physics, *args, **kwargs)

Evaluate the pansharpening model.

- Parameters:

y (deepinv.utils.TensorList) – (MS,PAN) images

physics (deepinv.physics.Pansharpen) – Pansharpening operator
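As a quick sanity check before calling forward, the shapes of the two elements of y can be derived from hrms_shape and scale_factor as described in the class docstring. The helper below is a hypothetical, dependency-free sketch (the name expected_input_shapes and the batch_size argument are assumptions, not part of deepinv); it only does the shape arithmetic.

```python
def expected_input_shapes(hrms_shape=(4, 900, 900), scale_factor=4, batch_size=1):
    """Hypothetical helper: return (ms_shape, pan_shape) for the (MS, PAN) pair.

    MS is (B, C, H, W) at low resolution; PAN is (B, 1, H*r, W*r), where
    (H*r, W*r) is the high-resolution size given by hrms_shape.
    """
    c, hr_h, hr_w = hrms_shape
    if hr_h % scale_factor or hr_w % scale_factor:
        raise ValueError("high-resolution size must be divisible by scale_factor")
    ms_shape = (batch_size, c, hr_h // scale_factor, hr_w // scale_factor)
    pan_shape = (batch_size, 1, hr_h, hr_w)
    return ms_shape, pan_shape
```

With the default arguments, MS is expected to have shape (1, 4, 225, 225) and PAN shape (1, 1, 900, 900).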