EPLL

- class deepinv.optim.EPLL(GMM=None, n_components=200, pretrained='download', patch_size=6, channels=1, device='cpu')

Bases: Module

Expected Patch Log Likelihood reconstruction method.

Reconstruction method based on the minimization problem

\[\underset{x}{\arg\min} \; \|y-Ax\|^2 - \sum_i \log p(P_ix)\]

where the first term is a standard \(\ell_2\) data fidelity and the second term represents a patch prior via Gaussian mixture models. Here \(P_i\) is a patch operator that extracts the \(i\)-th (overlapping) patch from the image.

The reconstruction function is based on the approximated half-quadratic splitting method as in Zoran and Weiss [131].
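For orientation, the splitting can be written out in the notation of the problem above. This is a sketch of the general scheme of Zoran and Weiss, not a transcription of the implementation: the auxiliary patch variables \(z_i\), the penalty weight \(\beta\), the GMM parameters \(\pi_k, \mu_k, \Sigma_k\), and the constant factors are assumptions introduced here, and the scaling used in the code may differ.

```latex
\begin{aligned}
&\text{Split objective:} && \min_{x,\{z_i\}} \; \|y-Ax\|^2 + \sum_i \Big( \tfrac{\beta}{2}\,\|P_i x - z_i\|^2 - \log p(z_i) \Big) \\
&\text{Patch step (approximate):} && k_i = \arg\max_k \; \pi_k \, \mathcal{N}\big(P_i x \mid \mu_k,\; \Sigma_k + \tfrac{1}{\beta} I\big) \\
& && z_i = \big(\Sigma_{k_i} + \tfrac{1}{\beta} I\big)^{-1} \big(\Sigma_{k_i} P_i x + \tfrac{1}{\beta}\,\mu_{k_i}\big) \\
&\text{Image step:} && \Big(2 A^\top A + \beta \sum_i P_i^\top P_i\Big)\, x = 2 A^\top y + \beta \sum_i P_i^\top z_i
\end{aligned}
```

The patch step is "approximated" because only the most likely mixture component is used for each patch instead of the full mixture. The betas argument of forward supplies the values of \(\beta\).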

- Parameters:

GMM (None, deepinv.optim.utils.GaussianMixtureModel) – Gaussian mixture defining the distribution on the patch space. None creates a GMM with n_components components whose dimension is determined by the arguments patch_size and channels.

n_components (int) – number of components of the generated GMM if GMM is None.

pretrained (str, None) – Path to pretrained weights of the GMM with file ending .pt. None for no pretrained weights, "download" for pretrained weights on the BSDS500 dataset, "GMM_lodopab_small" for the weights from the limited-angle CT example. See pretrained-weights for more details.

patch_size (int) – patch size.

channels (int) – number of color channels (e.g. 1 for gray-valued images and 3 for RGB images)

device (str, torch.device) – defines device (cpu or cuda)
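A minimal usage sketch for grayscale denoising, using only the constructor and forward arguments listed on this page. The helper name denoise_with_epll is hypothetical, the value batch_size=5000 is an arbitrary illustration, and modelling the measurement with deepinv.physics.Denoising and deepinv.physics.GaussianNoise is an assumption about how y was generated. The import sits inside the function so the definition itself does not require deepinv.

```python
def denoise_with_epll(y, sigma, device="cpu"):
    """Denoise grayscale images y (batch size x 1 x height x width) with EPLL.

    Hypothetical helper for illustration; y is expected to live on `device`.
    """
    # Imported lazily: defining this function does not need deepinv installed.
    import deepinv as dinv

    # Assumption: y = x + Gaussian noise with standard deviation sigma.
    physics = dinv.physics.Denoising(dinv.physics.GaussianNoise(sigma=sigma))

    # Constructor arguments as documented above. pretrained="download"
    # selects the weights trained on the BSDS500 dataset.
    model = dinv.optim.EPLL(
        n_components=200,
        pretrained="download",
        patch_size=6,
        channels=1,
        device=device,
    )

    # Signature: forward(y, physics, sigma=None, x_init=None, betas=None, batch_size=-1)
    # batch_size only limits how many patches are processed at once.
    return model(y, physics, sigma=sigma, batch_size=5000)
```

For other linear inverse problems, physics would be replaced by the corresponding deepinv.physics.LinearPhysics operator.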

- forward(y, physics, sigma=None, x_init=None, betas=None, batch_size=-1)

Approximated half-quadratic splitting method for image reconstruction as proposed by Zoran and Weiss.

- Parameters:

y (torch.Tensor) – tensor of observations. Shape: batch size x …

x_init (torch.Tensor, None) – tensor of initializations. If None, the adjoint of the forward operator is used for initialization. Shape: batch size x channels x height x width

physics (deepinv.physics.LinearPhysics) – Forward linear operator.

betas (list[float]) – parameters from the half-quadratic splitting. None uses the standard choice [1,4,8,16,32]/sigma_sq

batch_size (int) – batching the patch estimations for large images. No effect on the output; a small value reduces the memory consumption but might increase the computation time. -1 for considering all patches at once.
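The default schedule and the effect of batch_size can be illustrated with two small standalone helpers. Both names (default_betas, patch_batches) are hypothetical and not part of deepinv, and reading sigma_sq as sigma ** 2 is an assumption based on the parameter name. The batching helper only mimics the idea of processing patches in chunks; it is not the library's implementation.

```python
def default_betas(sigma):
    """Schedule [1, 4, 8, 16, 32] / sigma_sq, assuming sigma_sq = sigma ** 2."""
    sigma_sq = sigma ** 2
    return [b / sigma_sq for b in (1, 4, 8, 16, 32)]


def patch_batches(num_patches, batch_size=-1):
    """Yield (start, stop) index ranges that together cover all patches.

    batch_size=-1 yields a single range containing every patch.
    """
    if batch_size == -1:
        # Guard against a zero step when there are no patches at all.
        batch_size = max(num_patches, 1)
    for start in range(0, num_patches, batch_size):
        yield start, min(start + batch_size, num_patches)


if __name__ == "__main__":
    print(default_betas(0.5))
    print(list(patch_batches(10, batch_size=4)))
```

Every patch is visited exactly once regardless of the chunk size, which is why batch_size trades memory for time without changing the result.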

- negative_log_likelihood(x)

Takes patches and returns the negative log likelihood of the GMM for each patch.

- Parameters:

x (torch.Tensor) – tensor of patches of shape batch_size x number of patches per batch x patch_dimensions
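To make the quantity concrete, the following standalone sketch evaluates the negative log likelihood of patches under a Gaussian mixture. It is deliberately simplified: it uses nested Python lists instead of tensors and assumes diagonal covariances so that it runs with the standard library only. Neither simplification is claimed for deepinv.optim.utils.GaussianMixtureModel, and the function names are hypothetical.

```python
import math


def gmm_negative_log_likelihood(patch, weights, means, variances):
    """Negative log likelihood of one flattened patch under a diagonal GMM."""
    log_terms = []
    for w, mu, var in zip(weights, means, variances):
        # log( w * N(patch | mu, diag(var)) ), accumulated per coordinate
        log_p = math.log(w)
        for x_j, mu_j, var_j in zip(patch, mu, var):
            log_p -= 0.5 * (math.log(2 * math.pi * var_j) + (x_j - mu_j) ** 2 / var_j)
        log_terms.append(log_p)
    # log-sum-exp over the components for numerical stability
    m = max(log_terms)
    return -(m + math.log(sum(math.exp(t - m) for t in log_terms)))


def batch_negative_log_likelihood(x, weights, means, variances):
    """Apply the per-patch function to x of shape
    batch_size x number of patches per batch x patch dimension."""
    return [
        [gmm_negative_log_likelihood(p, weights, means, variances) for p in patches]
        for patches in x
    ]
```

The output has one value per patch, so an input of shape batch_size x number of patches per batch x patch dimension maps to batch_size x number of patches per batch in this sketch.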

Examples using EPLL:

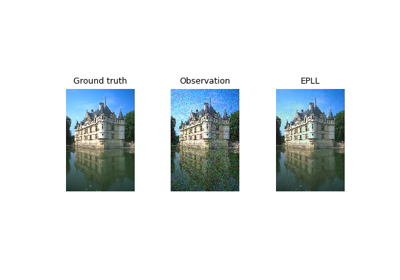

Expected Patch Log Likelihood (EPLL) for Denoising and Inpainting

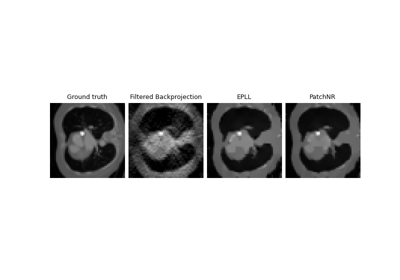

Patch priors for limited-angle computed tomography