DeepImagePrior

- class deepinv.models.DeepImagePrior(generator, img_size, iterations=2500, learning_rate=1e-2, verbose=False, re_init=False)

Bases: Reconstructor

Deep Image Prior reconstruction.

This method, introduced by Ulyanov et al. [1], reconstructs an image by minimizing the loss function

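\[\min_{\theta} \|y-AG_{\theta}(z)\|^2\]

where \(z\) is a random input, \(A\) is the forward operator of the physics model, and \(G_{\theta}\) is a convolutional decoder network with parameters \(\theta\). The minimization is solved with the Adam optimizer and should be stopped early, after a limited number of iterations, to avoid overfitting the noise in the measurement \(y\). The reconstruction is then \(G_{\theta}(z)\).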
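To make the objective concrete, here is a minimal sketch of the optimization loop in plain PyTorch, without deepinv. It is not the deepinv implementation: the function name `dip_reconstruct`, the small decoder standing in for \(G_{\theta}\), and the inpainting mask used as \(A\) are illustrative assumptions. The fixed iteration count plays the role of early stopping.

```python
import torch
import torch.nn as nn


def dip_reconstruct(generator, y, A, img_size, iterations=2500, learning_rate=1e-2):
    """Illustrative Deep Image Prior loop (a sketch, not deepinv's code).

    Minimizes ||y - A(G_theta(z))||^2 over the generator weights theta with
    Adam, stopping after a fixed number of iterations (early stopping).
    Returns the reconstruction G_theta(z) and the loss history.
    """
    # Random input z of shape (1, C, H, W); it stays fixed during optimization.
    z = torch.randn(1, *img_size, device=y.device)
    optimizer = torch.optim.Adam(generator.parameters(), lr=learning_rate)
    losses = []
    for _ in range(iterations):
        optimizer.zero_grad()
        loss = torch.sum((y - A(generator(z))) ** 2)  # data-fidelity term
        loss.backward()
        optimizer.step()
        losses.append(loss.item())
    with torch.no_grad():
        return generator(z), losses


# Usage on a toy inpainting problem (all choices below are assumptions).
torch.manual_seed(0)
# Hypothetical tiny decoder: maps 16x8x8 noise to a 1x32x32 image.
generator = nn.Sequential(
    nn.Upsample(scale_factor=2), nn.Conv2d(16, 32, 3, padding=1), nn.ReLU(),
    nn.Upsample(scale_factor=2), nn.Conv2d(32, 1, 3, padding=1), nn.Sigmoid(),
)
x_true = torch.rand(1, 1, 32, 32)
mask = (torch.rand(1, 1, 32, 32) > 0.5).float()


def A(x):
    # Inpainting forward operator: keep roughly half of the pixels.
    return mask * x


y = A(x_true)
x_hat, losses = dip_reconstruct(generator, y, A, img_size=(16, 8, 8), iterations=200)
print(x_hat.shape)  # torch.Size([1, 1, 32, 32])
```

Only the network weights are optimized while \(z\) stays fixed, so the architecture of \(G_{\theta}\) acts as the image prior; running the loop much longer would eventually let the generator fit the noise in \(y\) as well.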

Note

This method only works with certain convolutional decoder networks. We recommend using the network deepinv.models.ConvDecoder.

Note

The number of iterations and learning rate are set to the values used in the original paper. However, these values may not be optimal for all problems. We recommend experimenting with different values.

- Parameters:

generator (torch.nn.Module) – Convolutional decoder network.

img_size (list, tuple) – Size (C,H,W) of the input noise vector \(z\).

iterations (int) – Number of optimization iterations.

learning_rate (float) – Learning rate of the Adam optimizer.

verbose (bool) – If True, print progress.

re_init (bool) – If True, re-initialize the network parameters before each reconstruction.
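The re_init option amounts to re-drawing the network weights before a new reconstruction. Below is a minimal sketch of one way to do this in plain PyTorch, assuming the generator's layers expose the standard `reset_parameters()` method (as `torch.nn.Conv2d` and `torch.nn.Linear` do). The helper name `reinit_parameters` is hypothetical, and this is not necessarily how deepinv implements the option.

```python
import torch
import torch.nn as nn


def reinit_parameters(module: nn.Module) -> None:
    """Hypothetical helper: re-draw the weights of every layer that supports it."""
    # module.modules() yields the module itself and all nested submodules.
    for m in module.modules():
        if hasattr(m, "reset_parameters"):
            m.reset_parameters()


torch.manual_seed(0)
net = nn.Sequential(nn.Conv2d(4, 8, 3, padding=1), nn.ReLU(), nn.Conv2d(8, 1, 3, padding=1))
before = net[0].weight.detach().clone()
reinit_parameters(net)
after = net[0].weight.detach().clone()
print(torch.equal(before, after))  # False
```

Without such a reset, a generator that is reused across calls starts each new optimization from the weights left by the previous one.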

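- References:

[1] Ulyanov, D., Vedaldi, A., & Lempitsky, V. (2018). Deep Image Prior. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR).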

- forward(y, physics, **kwargs)

Reconstruct an image from the measurement \(y\). The reconstruction is performed by solving a minimization problem.

Warning

The optimization is run for every test batch. Thus, this method can be slow when evaluated on a large number of test batches.

- Parameters:

y (torch.Tensor) – Measurement.

physics (deepinv.physics.Physics) – Physics model.

Examples using DeepImagePrior:

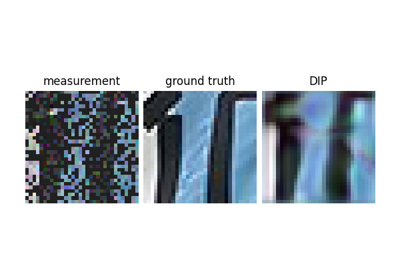

Reconstructing an image using the deep image prior.