NoisyDataFidelity

- class deepinv.sampling.NoisyDataFidelity(d=None, weight=1.0, *args, **kwargs)

Bases: deepinv.optim.DataFidelity

Preconditioned data fidelity term for noisy data \(- \log p(y|x + \sigma(t) \omega)\) with \(\omega\sim\mathcal{N}(0,\mathrm{I})\).

This is a base class for the conditional classes approximating \(\log p_t(y|x_t)\) that are used in diffusion algorithms for inverse problems, in deepinv.sampling.PosteriorDiffusion. It comes with a .grad method computing the gradient of the data-fidelity term, that is, the negative score \(-\nabla_{x_t} \log p_t(y|x_t)\). By default we have

\[\begin{equation*} -\nabla_{x_t} \log p(y|x + \sigma(t) \omega) = P(A(x_t') - y), \end{equation*}\]

where \(P\) is a preconditioner and \(x_t'\) is an estimate of the image \(x\). By default, \(P\) is defined as \(A^\top\) and \(x_t' = x_t\), so this class matches the deepinv.optim.DataFidelity class.

- Parameters:

d (deepinv.optim.Distance) – Distance metric to use for the data fidelity term. Defaults to deepinv.optim.L2Distance.

weight (float) – Weighting factor for the data fidelity term. Defaults to 1.
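The default gradient can be checked numerically. The sketch below is plain NumPy, not deepinv code, and rests on assumptions: a linear forward operator stored as a matrix `A`, the L2 distance \(\tfrac{1}{2}\|A x - y\|^2\), and `weight = 1`. It compares \(A^\top(A x - y)\), i.e. \(P(A(x_t') - y)\) with \(P = A^\top\) and \(x_t' = x_t\), against a finite-difference gradient of that term.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical linear forward operator A: R^5 -> R^3, stored as a matrix.
A = rng.standard_normal((3, 5))
x = rng.standard_normal(5)
y = rng.standard_normal(3)


def fidelity(x):
    # Assumed L2 data-fidelity term 0.5 * ||A x - y||^2 (weight = 1).
    r = A @ x - y
    return 0.5 * float(r @ r)


# Default gradient P(A(x) - y) with P = A^T and x_t' = x_t.
grad_default = A.T @ (A @ x - y)

# Central finite differences of the fidelity term along each coordinate.
eps = 1e-6
grad_fd = np.array([
    (fidelity(x + eps * e) - fidelity(x - eps * e)) / (2 * eps)
    for e in np.eye(5)
])

print(np.allclose(grad_default, grad_fd, atol=1e-5))  # True
```

Since the term is quadratic, central differences are exact up to rounding, so the two gradients agree closely.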

- diff(x, y, physics, *args, **kwargs)

Computes the difference \(A(x) - y\) between the forward operator applied to the current iterate and the input data.

- Parameters:

x (torch.Tensor) – Current iterate.

y (torch.Tensor) – Input data.

physics (deepinv.physics.Physics) – physics model.

- Returns:

(torch.Tensor) difference between the forward operator applied to the current iterate and the input data.

- Return type:

torch.Tensor
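As a minimal illustration of `diff`, the following sketch uses a hypothetical masking operator written in NumPy (in deepinv the operator would be a deepinv.physics.Physics object acting on torch.Tensor inputs). The residual \(A(x) - y\) is zero wherever the prediction agrees with the measurement.

```python
import numpy as np

# Hypothetical masking operator: A(x) keeps the pixels where mask == 1.
mask = np.array([1.0, 0.0, 1.0, 1.0])


def A(x):
    return mask * x


def diff(x, y):
    # Residual A(x) - y between predicted and observed measurements.
    return A(x) - y


x = np.array([1.0, 2.0, 3.0, 4.0])
y = np.array([0.5, 0.0, 3.0, 5.0])
print(diff(x, y).tolist())  # [0.5, 0.0, 0.0, -1.0]
```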

- forward(x, y, physics, *args, **kwargs)

Computes the data-fidelity term.

- Parameters:

x (torch.Tensor) – input image

y (torch.Tensor) – measurements

physics (deepinv.physics.Physics) – forward operator

- Returns:

(torch.Tensor) loss term.

- Return type:

torch.Tensor
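A sketch of the value returned by `forward`, assuming it equals `weight * d(A(x), y)` and that the default L2 distance is \(\tfrac{1}{2}\|u - y\|^2\) with unit noise level. Both are assumptions about the implementation; `l2_distance` and `forward` below are hypothetical NumPy stand-ins for a matrix operator.

```python
import numpy as np


def l2_distance(u, y):
    # Assumed L2 distance: 0.5 * ||u - y||^2 (unit noise level).
    r = u - y
    return 0.5 * float(np.sum(r * r))


def forward(x, y, A, weight=1.0):
    # Data-fidelity value: weight * d(A(x), y).
    return weight * l2_distance(A @ x, y)


A = np.array([[1.0, 0.0], [0.0, 2.0]])
x = np.array([1.0, 1.0])
y = np.array([0.0, 0.0])
print(forward(x, y, A))              # 2.5
print(forward(x, y, A, weight=2.0))  # 5.0
```

Here \(A x = (1, 2)\), so \(\tfrac{1}{2}\|A x - y\|^2 = 2.5\), and the weight scales the value linearly.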

- grad(x, y, physics, *args, **kwargs)

Computes the gradient of the data-fidelity term.

- Parameters:

x (torch.Tensor) – Current iterate.

y (torch.Tensor) – Input data.

physics (deepinv.physics.Physics) – physics model

- Returns:

(torch.Tensor) gradient of the data-fidelity term.

- Return type:

torch.Tensor
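The gradient is the preconditioned residual, here assumed to be scaled by the weight as \(\text{weight}\cdot P(A(x) - y)\). The hypothetical NumPy sketch below plugs it into plain gradient descent with the default \(P = A^\top\) and step \(1/\|A\|_2^2\), and checks that the data-fidelity value decreases.

```python
import numpy as np

rng = np.random.default_rng(1)
A = rng.standard_normal((4, 6))  # hypothetical linear operator
y = rng.standard_normal(4)


def precond(u):
    # Default preconditioner P = A^T.
    return A.T @ u


def grad(x, weight=1.0):
    # Gradient of the fidelity term: weight * P(A(x) - y).
    return weight * precond(A @ x - y)


def fidelity(x):
    # Assumed L2 fidelity 0.5 * ||A x - y||^2.
    r = A @ x - y
    return 0.5 * float(r @ r)


x = np.zeros(6)
step = 1.0 / np.linalg.norm(A, 2) ** 2  # 1 / ||A||_2^2 keeps descent stable
for _ in range(50):
    x = x - step * grad(x)

print(fidelity(x) < fidelity(np.zeros(6)))  # True
```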

- precond(u, physics, *args, **kwargs)

The preconditioner \(P\) for the data fidelity term. Defaults to \(A^{\top}\).

- Parameters:

u (torch.Tensor) – input tensor.

physics (deepinv.physics.Physics) – physics model.

- Returns:

(torch.Tensor) preconditioned tensor \(P(u)\).

- Return type:

torch.Tensor
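If `grad` is built on `precond` as the formula \(P(A(x_t') - y)\) suggests, a subclass can change \(P\) by overriding that method alone. The NumPy sketch below is hypothetical: `MatrixNoisyDataFidelity` only mirrors the documented methods for a matrix operator, and `PinvDataFidelity` swaps in a regularized pseudo-inverse \(P = A^\top(AA^\top + \gamma I)^{-1}\) as one illustrative choice; neither is a deepinv class.

```python
import numpy as np


class MatrixNoisyDataFidelity:
    """Hypothetical NumPy stand-in mirroring the documented methods."""

    def __init__(self, weight=1.0):
        self.weight = weight

    def diff(self, x, y, A):
        # Residual A(x) - y.
        return A @ x - y

    def precond(self, u, A):
        # Default preconditioner P = A^T.
        return A.T @ u

    def grad(self, x, y, A):
        # weight * P(A(x) - y).
        return self.weight * self.precond(self.diff(x, y, A), A)


class PinvDataFidelity(MatrixNoisyDataFidelity):
    """Illustrative subclass: P = A^T (A A^T + gamma I)^{-1}."""

    def __init__(self, gamma=1e-3, weight=1.0):
        super().__init__(weight)
        self.gamma = gamma

    def precond(self, u, A):
        m = A.shape[0]
        # Solve (A A^T + gamma I) v = u instead of forming the inverse.
        v = np.linalg.solve(A @ A.T + self.gamma * np.eye(m), u)
        return A.T @ v


rng = np.random.default_rng(2)
A = rng.standard_normal((3, 5))
x = rng.standard_normal(5)
y = rng.standard_normal(3)

g_default = MatrixNoisyDataFidelity().grad(x, y, A)
g_pinv = PinvDataFidelity(gamma=1e-8).grad(x, y, A)

# With a tiny gamma, applying A to the pinv-preconditioned residual
# approximately recovers the residual A x - y itself.
print(np.allclose(A @ g_pinv, A @ x - y, atol=1e-4))
```

Only `precond` is overridden; `diff` and `grad` are inherited unchanged.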

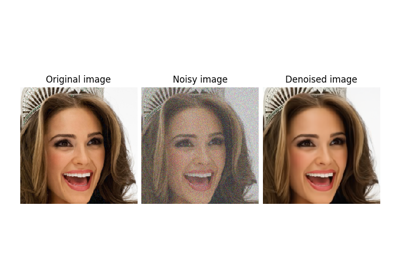

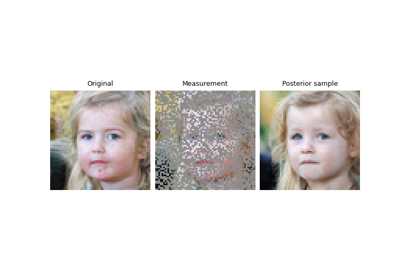

Examples using NoisyDataFidelity:

Using state-of-the-art diffusion models from HuggingFace Diffusers with DeepInverse

Building your diffusion posterior sampling method using SDEs