SpaceVaryingBlur

- class deepinv.physics.SpaceVaryingBlur(filters=None, multipliers=None, padding='valid', device='cpu', **kwargs)

Bases:

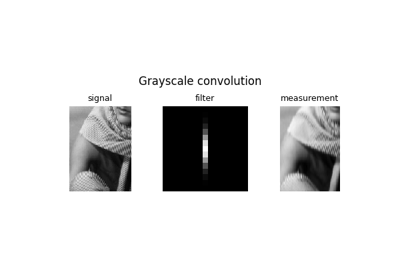

LinearPhysicsSpace varying blur via product-convolution.

This linear operator performs

\[y = \sum_{k=1}^K h_k \star (w_k \odot x)\]

where \(\star\) is a convolution, \(\odot\) is a Hadamard product, \(w_k\) are the multipliers, and \(h_k\) are the filters.
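To make the formula concrete, here is a minimal NumPy sketch of the product-convolution for a single-channel image. It is not deepinv's implementation: the helper names `conv2d_valid` and `product_convolution` are illustrative, and the sketch assumes a true (kernel-flipped) convolution with a 'valid' output size.

```python
import numpy as np

def conv2d_valid(x, h):
    """True 2D convolution (flipped kernel), keeping only fully overlapping positions."""
    kh, kw = h.shape
    oh, ow = x.shape[0] - kh + 1, x.shape[1] - kw + 1
    hf = h[::-1, ::-1]  # flip the kernel so this is a convolution, not a correlation
    out = np.zeros((oh, ow))
    for a in range(kh):
        for b in range(kw):
            out += hf[a, b] * x[a:a + oh, b:b + ow]
    return out

def product_convolution(x, filters, multipliers):
    """y = sum_k h_k * (w_k . x), with filters (K, h, w) and multipliers (K, H, W)."""
    return sum(conv2d_valid(w * x, h) for h, w in zip(filters, multipliers))

rng = np.random.default_rng(0)
x = rng.standard_normal((16, 16))
h = rng.random((2, 3, 3))                       # K = 2 filters of size 3 x 3
ramp = np.linspace(0.0, 1.0, 16)[None, :] * np.ones((16, 1))
w = np.stack([ramp, 1.0 - ramp])                # multipliers summing to one at each pixel
y = product_convolution(x, h, w)                # blur blends from h[1] (left) to h[0] (right)
```

When the multipliers sum to one and all filters are identical, the operator reduces to an ordinary convolution; using a different filter for each \(k\) is what makes the blur vary across the image.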

- Parameters:

filters (torch.Tensor) – Filters \(h_k\). Tensor of size (B, C, K, h, w), where B is the batch size, C the number of channels, K the number of filters, and h and w the filter height and width, which should be less than or equal to the height and width of the image \(x\), respectively.

multipliers (torch.Tensor) – Multipliers \(w_k\). Tensor of size (B, C, K, H, W), where B is the batch size, C the number of channels, K the number of multipliers, and H and W the height and width of the image \(x\).

padding (str) – options = 'valid', 'circular', 'replicate', 'reflect'. If padding = 'valid', the blurred output is smaller than the image (no padding); otherwise the blurred output has the same size as the image.

device (torch.device, str) – Device on which the physics' buffers will be created. When a buffer is updated via physics.update_parameters(), the new value is automatically cast to the device of the buffer it replaces if that buffer is not None; otherwise the device of the provided value is used. To change the device of all buffers, use physics.to(device).
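The effect of the padding option on the output size can be sketched in NumPy. This is an illustration, not deepinv's code: it assumes that the non-'valid' modes pad the image by a total of h-1 rows and w-1 columns before a 'valid' convolution, and that the torch-style mode names map to `np.pad` modes as in the hypothetical `NUMPY_MODE` table.

```python
import numpy as np

# Assumed correspondence between the padding names above and np.pad modes.
NUMPY_MODE = {"circular": "wrap", "replicate": "edge", "reflect": "reflect"}

def blur(x, h, padding="valid"):
    """Convolve a 2D image with one filter under the chosen boundary handling."""
    kh, kw = h.shape
    if padding != "valid":
        top, left = (kh - 1) // 2, (kw - 1) // 2
        pad = ((top, kh - 1 - top), (left, kw - 1 - left))
        x = np.pad(x, pad, mode=NUMPY_MODE[padding])
    oh, ow = x.shape[0] - kh + 1, x.shape[1] - kw + 1
    hf = h[::-1, ::-1]  # flipped kernel: true convolution
    out = np.zeros((oh, ow))
    for a in range(kh):
        for b in range(kw):
            out += hf[a, b] * x[a:a + oh, b:b + ow]
    return out

x = np.arange(64, dtype=float).reshape(8, 8)
h = np.full((3, 3), 1.0 / 9.0)
shapes = {p: blur(x, h, p).shape for p in ("valid", "circular", "replicate", "reflect")}
```

Under these assumptions, 'valid' shrinks an 8 x 8 image to 6 x 6 for a 3 x 3 filter, while the three padded modes keep the 8 x 8 size.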

- Examples:

We show how to instantiate a spatially varying blur operator.

>>> import torch
>>> from deepinv.physics.generator import DiffractionBlurGenerator, ProductConvolutionBlurGenerator
>>> from deepinv.physics.blur import SpaceVaryingBlur
>>> from deepinv.utils.plotting import plot
>>> psf_size = 32
>>> img_size = (256, 256)
>>> delta = 16
>>> psf_generator = DiffractionBlurGenerator((psf_size, psf_size))
>>> pc_generator = ProductConvolutionBlurGenerator(psf_generator=psf_generator, img_size=img_size)
>>> params_pc = pc_generator.step(1)
>>> physics = SpaceVaryingBlur(**params_pc)
>>> dirac_comb = torch.zeros(img_size).unsqueeze(0).unsqueeze(0)
>>> dirac_comb[0,0,::delta,::delta] = 1
>>> psf_grid = physics(dirac_comb)
>>> plot(psf_grid, titles="Space varying impulse responses")

- A(x, filters=None, multipliers=None, padding=None, **kwargs)

Applies the space varying blur operator to the input image.

It can receive new multipliers \(w_k\), filters \(h_k\) and padding, which are used in the forward operator and stored as the current parameters.

- Parameters:

filters (torch.Tensor) – Filters \(h_k\). Tensor of size (b, c, K, h, w). \(b \in \{1, B\}\) and \(c \in \{1, C\}\), \(h\leq H\) and \(w\leq W\).

multipliers (torch.Tensor) – Multipliers \(w_k\). Tensor of size (b, c, K, H, W). \(b \in \{1, B\}\) and \(c \in \{1, C\}\)

padding (str) – options = 'valid', 'circular', 'replicate', 'reflect'. If padding = 'valid', the blurred output is smaller than the image (no padding); otherwise the blurred output has the same size as the image.

device (str) – cpu or cuda
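The shape constraints \(b \in \{1, B\}\) and \(c \in \{1, C\}\) read like standard broadcasting rules: a batch or channel dimension of size 1 is shared across the whole batch or all channels. A small NumPy sketch of the multiplier step \(w_k \odot x\) under that assumption (the array names are illustrative, not deepinv's internals):

```python
import numpy as np

B, C, K, H, W = 4, 3, 2, 8, 8
x = np.ones((B, C, H, W))                                       # batch of images
multipliers = np.random.default_rng(0).random((1, 1, K, H, W))  # b = 1, c = 1

# Insert a K axis into x so that (1, 1, K, H, W) * (B, C, 1, H, W) -> (B, C, K, H, W).
weighted = multipliers * x[:, :, None]
```

Here a single set of K multipliers is applied to every image and channel in the batch; passing b = B or c = C instead would give each image or channel its own multipliers.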

- A_adjoint(y, filters=None, multipliers=None, padding=None, **kwargs)

Applies the adjoint operator.

It can receive new multipliers \(w_k\), filters \(h_k\) and padding, which are used when applying the adjoint operator and stored as the current parameters.

- Parameters:

filters (torch.Tensor) – Filters \(h_k\). Tensor of size (b, c, K, h, w). \(b \in \{1, B\}\) and \(c \in \{1, C\}\), \(h\leq H\) and \(w\leq W\).

multipliers (torch.Tensor) – Multipliers \(w_k\). Tensor of size (b, c, K, H, W). \(b \in \{1, B\}\) and \(c \in \{1, C\}\)

padding (str) – options = 'valid', 'circular', 'replicate', 'reflect'. If padding = 'valid', the blurred output is smaller than the image (no padding); otherwise the blurred output has the same size as the image.
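For reference, the adjoint of the product-convolution formula is \(A^\top y = \sum_{k=1}^K w_k \odot (h_k^\top y)\), where \(h_k^\top\) denotes the adjoint of the convolution with \(h_k\). The NumPy sketch below is not deepinv's implementation: it assumes 'valid' padding and a single channel, uses hypothetical helpers named `A` and `A_adjoint`, and checks numerically that \(\langle Ax, y\rangle = \langle x, A^\top y\rangle\).

```python
import numpy as np

def A(x, filters, multipliers):
    """Forward sketch: y = sum_k h_k * (w_k . x), 'valid' output size."""
    _, kh, kw = filters.shape
    oh, ow = x.shape[0] - kh + 1, x.shape[1] - kw + 1
    y = np.zeros((oh, ow))
    for h, w in zip(filters, multipliers):
        hf, wx = h[::-1, ::-1], w * x
        for a in range(kh):
            for b in range(kw):
                y += hf[a, b] * wx[a:a + oh, b:b + ow]
    return y

def A_adjoint(y, filters, multipliers):
    """Adjoint sketch: scatter y back through each filter, then apply w_k."""
    _, kh, kw = filters.shape
    oh, ow = y.shape
    x = np.zeros(multipliers.shape[1:])
    for h, w in zip(filters, multipliers):
        hf = h[::-1, ::-1]
        z = np.zeros_like(x)
        for a in range(kh):
            for b in range(kw):
                z[a:a + oh, b:b + ow] += hf[a, b] * y  # transpose of the forward gather
        x += w * z
    return x

rng = np.random.default_rng(0)
x = rng.standard_normal((12, 12))
y = rng.standard_normal((10, 10))
filters = rng.random((2, 3, 3))
multipliers = rng.random((2, 12, 12))
lhs = np.sum(A(x, filters, multipliers) * y)          # <A x, y>
rhs = np.sum(x * A_adjoint(y, filters, multipliers))  # <x, A^T y>
```

The two inner products agree up to floating-point error, which is the usual sanity check for a hand-written adjoint.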

- update_parameters(filters=None, multipliers=None, padding=None, **kwargs)

Updates the current parameters.

- Parameters:

filters (torch.Tensor) – Filters \(h_k\). Tensor of size (b, c, K, h, w). \(b \in \{1, B\}\) and \(c \in \{1, C\}\), \(h\leq H\) and \(w\leq W\).

multipliers (torch.Tensor) – Multipliers \(w_k\). Tensor of size (b, c, K, H, W). \(b \in \{1, B\}\) and \(c \in \{1, C\}\)

padding (str) – options = 'valid', 'circular', 'replicate', 'reflect'.