PatchNR

- class deepinv.optim.PatchNR(normalizing_flow=None, pretrained=None, patch_size=6, channels=1, num_layers=5, sub_net_size=256, device='cpu')

Bases: Prior

Patch prior via normalizing flows.

The forward method evaluates its negative log likelihood.
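For orientation, the negative log-likelihood of a patch prior can be written with the change-of-variables formula. This is a sketch that assumes the flow T_θ maps each flattened patch to a standard Gaussian latent. The notation is introduced here and is not taken from this page: P_i extracts the i-th patch, N_p is the number of patches, and d = channels · patch_size² is the dimension of a flattened patch.

```latex
R(x) = \sum_{i=1}^{N_p} -\log p_\theta(P_i x),
\qquad
-\log p_\theta(y) = \tfrac{1}{2}\,\lVert T_\theta(y)\rVert_2^2 + \tfrac{d}{2}\log(2\pi) - \log\bigl|\det \nabla T_\theta(y)\bigr|
```

The constant term does not depend on the image, so an implementation may drop it without changing the minimizers of R.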

- Parameters:

normalizing_flow (torch.nn.Module) – describes the normalizing flow of the model. In general it can be any torch.nn.Module supporting backpropagation. It takes a (batched) tensor of flattened patches and the boolean rev (default False) as input and returns the value and the log-determinant of the Jacobian of the normalizing flow. If rev=True, it applies the inverse of the normalizing flow. When set to None, it is set to a dense invertible neural network built with the FrEIA library, where the number of invertible blocks and the size of the subnetworks are determined by the parameters num_layers and sub_net_size.

pretrained (str) – path to a .pt file with pretrained weights, None for random initialization, or "PatchNR_lodopab_small" for the weights from the limited-angle CT example.

patch_size (int) – size of the patches.

channels (int) – number of channels of the underlying images/patches.

num_layers (int) – number of blocks of the generated normalizing flow if normalizing_flow is None.

sub_net_size (int) – number of hidden neurons in the subnetworks of the generated normalizing flow if normalizing_flow is None.

device (str) – device used for the model.
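To illustrate the interface described for normalizing_flow, here is a minimal sketch of a custom flow. DiagonalAffineFlow is a hypothetical toy class, not part of deepinv or FrEIA: it applies an elementwise affine map to flattened patches, accepts rev, and returns a pair (value, per-sample log-determinant). It assumes square patch_size × patch_size patches, so a flattened patch has channels · patch_size² entries.

```python
import torch
import torch.nn as nn


class DiagonalAffineFlow(nn.Module):
    """Hypothetical toy flow: z = (y - shift) * exp(-log_scale), applied per coordinate.

    Input: flattened patches of shape (N, dim). Output: (value, logdet) where
    logdet has shape (N,). With rev=True the inverse map is applied.
    """

    def __init__(self, dim):
        super().__init__()
        # Learnable per-coordinate shift and log-scale, trainable by backpropagation.
        self.shift = nn.Parameter(torch.zeros(dim))
        self.log_scale = nn.Parameter(torch.zeros(dim))

    def forward(self, y, rev=False):
        if not rev:
            # Forward map; the Jacobian is diagonal with entries exp(-log_scale).
            z = (y - self.shift) * torch.exp(-self.log_scale)
            logdet = -self.log_scale.sum().expand(y.shape[0])
        else:
            # Inverse map; its log-determinant is the negative of the forward one.
            z = y * torch.exp(self.log_scale) + self.shift
            logdet = self.log_scale.sum().expand(y.shape[0])
        return z, logdet


# Usage: 6x6 single-channel patches give flattened vectors of length 36.
flow = DiagonalAffineFlow(dim=6 * 6 * 1)
z, logdet = flow(torch.randn(4, 36))
```

A real flow would be far more expressive (the default is a FrEIA network), but a module with this call signature is the kind of object the parameter description asks for; it would be passed as normalizing_flow together with matching patch_size and channels.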

Note

This class requires the FrEIA package to be installed. Install with pip install FrEIA.

- fn(x, *args, **kwargs)

Evaluates the negative log-likelihood function of the PatchNR.

- Parameters:

x (torch.Tensor) – image tensor
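The following is a minimal sketch of how a patch-based negative log-likelihood can be evaluated from a flow with the interface above. It is not deepinv's implementation: the helper patch_nll, the use of torch.nn.functional.unfold to extract all overlapping patches, the inclusion of the Gaussian constant, and the sum over patches are illustrative assumptions. The library may extract patches differently, use a subset of them, or reduce differently.

```python
import math

import torch
import torch.nn.functional as F


def patch_nll(x, flow, patch_size=6):
    """Hypothetical helper: sum of patch negative log-likelihoods per image.

    x: images of shape (B, C, H, W).
    flow: callable mapping flattened patches (N, C*p*p) to (z, logdet),
          with z mapped towards a standard Gaussian latent.
    Returns a tensor of shape (B,).
    """
    B = x.shape[0]
    # All overlapping p x p patches: (B, C*p*p, L) -> (B*L, C*p*p).
    patches = F.unfold(x, kernel_size=patch_size)
    L = patches.shape[-1]
    patches = patches.transpose(1, 2).reshape(B * L, -1)
    z, logdet = flow(patches)
    d = z.shape[1]
    # Change of variables: -log p(y) = -log N(z; 0, I) - log|det J_T(y)|.
    nll = 0.5 * (z ** 2).sum(dim=1) + 0.5 * d * math.log(2 * math.pi) - logdet
    # Sum the per-patch values of each image.
    return nll.reshape(B, L).sum(dim=1)
```

Because every operation is differentiable, the result can be backpropagated to x, which is what a regularizer used inside an optimization scheme needs.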

Examples using PatchNR:

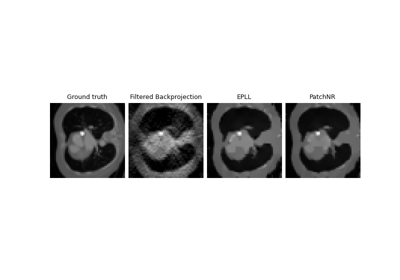

Patch priors for limited-angle computed tomography