DiffusersDenoiserWrapper

- class deepinv.models.DiffusersDenoiserWrapper(mode_id=None, clip_output=True, dtype=torch.float32, device='cpu', *args, **kwargs)

Bases:

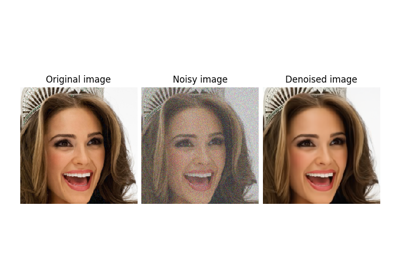

ScoreModelWrapperWraps a HuggingFace diffusers model as a DeepInv Denoiser.

- Parameters:

mode_id (str) – Diffusers model id or HuggingFace hub repository id, for example 'google/ddpm-cat-256'. The id must work with DiffusionPipeline. See Diffusers Documentation.

clip_output (bool) – Whether to clip the output to the model range. Default is True.

device (str | torch.device) – Device to load the model on. Default is 'cpu'.

Note

Currently, only models trained with DDPMScheduler, DDIMScheduler or PNDMScheduler are supported.

Warning

This wrapper requires the diffusers and transformers packages. You can install them via pip install diffusers transformers.
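As a convenience, the optional dependencies named in the warning can be checked before constructing the wrapper. The following is only a minimal sketch using the standard library; the helper name `missing_packages` is hypothetical and is not part of deepinv or diffusers.

```python
import importlib.util


def missing_packages(names=("diffusers", "transformers")):
    """Return the top-level package names in `names` that cannot be found for import.

    Hypothetical helper for illustration; not part of deepinv.
    """
    return [name for name in names if importlib.util.find_spec(name) is None]


missing = missing_packages()
if missing:
    # Suggest the pip command for whichever packages are absent.
    print("Missing optional packages:", ", ".join(missing))
    print("Install with: pip install " + " ".join(missing))
else:
    print("diffusers and transformers are available.")
```

`importlib.util.find_spec` only locates a package without importing it, so the check stays cheap even when the packages are installed.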

- Examples:

>>> import deepinv as dinv
>>> from deepinv.models import DiffusersDenoiserWrapper
>>> import torch
>>> device = dinv.utils.get_device(verbose=False)
>>> denoiser = DiffusersDenoiserWrapper(mode_id='google/ddpm-cat-256', device=device)
>>> x = dinv.utils.load_example(
...     "cat.jpg",
...     img_size=256,
...     resize_mode="resize",
... ).to(device)
>>> sigma = 0.1
>>> x_noisy = x + sigma * torch.randn_like(x)
>>> with torch.no_grad():
...     x_denoised = denoiser(x_noisy, sigma=sigma)

- forward(x, sigma=None, *args, **kwargs)

Applies denoiser \(\operatorname{D}_{\sigma}(x)\). The input x is expected to be in the [0, 1] range (up to random noise) and the output is also in the [0, 1] range.

- Parameters:

x (torch.Tensor) – noisy input, of shape [B, C, H, W].

sigma (torch.Tensor, float) – noise level. Can be a float or a torch.Tensor of shape [B]. If a single float is provided, the same noise level is used for all samples in the batch. Otherwise, batch-wise noise levels are used.

args – additional positional arguments to be passed to the model.

kwargs – additional keyword arguments to be passed to the model. For example, a prompt for text-conditioned or class_label for class-conditioned models.

- Returns:

(torch.Tensor) the denoised output.

- Return type:

torch.Tensor
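The batch-wise form of sigma described above can be prepared as in the sketch below. It uses only torch; the random tensor x is a stand-in for real images in [0, 1], and the batch size and noise levels are arbitrary illustrative values. The final call is left as a comment because it needs a constructed denoiser, as in the class example.

```python
import torch

torch.manual_seed(0)
B, C, H, W = 4, 3, 32, 32

# Stand-in batch of clean images in [0, 1]; real inputs would be loaded images.
x = torch.rand(B, C, H, W)

# One noise level per sample, as a tensor of shape [B].
sigma = torch.tensor([0.05, 0.1, 0.2, 0.4])

# Reshape to [B, 1, 1, 1] so that each level broadcasts over its own image.
x_noisy = x + sigma.view(-1, 1, 1, 1) * torch.randn_like(x)

# With a constructed wrapper, the call would then be:
# with torch.no_grad():
#     x_denoised = denoiser(x_noisy, sigma=sigma)
```

Passing a plain float instead of the tensor applies the same noise level to every sample in the batch.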

Examples using DiffusersDenoiserWrapper:

Using state-of-the-art diffusion models from HuggingFace Diffusers with DeepInverse