DistributedProcessing

- class deepinv.distributed.DistributedProcessing(ctx, processor, *, strategy=None, strategy_kwargs=None, max_batch_size=None, **kwargs)

Bases: object

Distributed signal processing using pluggable tiling and reduction strategies.

This class enables distributed processing of large signals (images, volumes, etc.) by:

1. Splitting the signal into patches using the chosen strategy.
2. Distributing the patches across multiple processes/GPUs.
3. Processing each patch independently with the provided processor function.
4. Combining the processed patches back into the full signal, with proper handling of overlapping regions.
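The four steps above can be sketched in a single process for a 1D signal. This is a minimal illustration under stated assumptions, not the deepinv implementation: `split_1d` and `process_distributed` are hypothetical helpers, ranks are simulated by a loop with a round-robin patch assignment (an assumption), and overlapping outputs are merged by plain averaging rather than the smooth blending that the 'overlap_tiling' strategy is described as using.

```python
from typing import Callable, List, Sequence, Tuple

# Hypothetical processor type for this sketch: a patch of floats in, same-length list out.
Processor = Callable[[Sequence[float]], List[float]]


def split_1d(n: int, patch_size: int, overlap: int) -> List[Tuple[int, int]]:
    """Step 1: return (start, end) windows that cover range(n) with the given overlap."""
    stride = patch_size - overlap
    if stride <= 0:
        raise ValueError("overlap must be smaller than patch_size")
    windows = []
    start = 0
    while True:
        end = min(start + patch_size, n)
        windows.append((start, end))
        if end == n:
            break
        start += stride
    return windows


def process_distributed(signal: Sequence[float], processor: Processor,
                        world_size: int, patch_size: int, overlap: int) -> List[float]:
    windows = split_1d(len(signal), patch_size, overlap)
    acc = [0.0] * len(signal)  # sum of processed values per sample
    count = [0] * len(signal)  # number of patches covering each sample
    for rank in range(world_size):
        # Step 2 (simulated, assumed round-robin): rank r takes windows r, r + world_size, ...
        for start, end in windows[rank::world_size]:
            # Step 3: each patch is processed independently.
            out = processor(signal[start:end])
            for i, value in enumerate(out, start=start):
                acc[i] += value
                count[i] += 1
    # Step 4: in a real multi-process run, acc and count would first be summed across
    # ranks (e.g. with an all-reduce) before normalizing the overlapping regions.
    return [a / c for a, c in zip(acc, count)]
```

With a pointwise processor the result does not depend on how patches are assigned to ranks: `process_distributed([float(i) for i in range(10)], lambda p: [2 * x for x in p], world_size=3, patch_size=4, overlap=2)` returns `[2.0 * i for i in range(10)]`. Dividing by the per-sample patch count is what keeps overlaps from being double counted; a blending strategy would replace the constant weight of 1 per patch with a spatially varying weight.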

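The processor can be any callable that maps a batched tensor of patches to a tensor of the same shape (e.g., a denoiser, a prior, or a neural network). The class handles the distributed coordination, from assigning patches to processes to reassembling the output, automatically.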
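A processor only has to respect the shape contract stated for the `processor` parameter: take a batch of patches of shape (N, C, ...) and return a tensor of the same shape. The `box_blur` function below is hypothetical (not part of deepinv): a 3x3 per-channel mean filter for 2D patches of shape (N, C, H, W), written with standard PyTorch ops. A pretrained denoiser wrapped in a function would play the same role.

```python
import torch
import torch.nn.functional as F


def box_blur(x: torch.Tensor) -> torch.Tensor:
    """Hypothetical processor: 3x3 mean filter applied to each channel of (N, C, H, W) patches."""
    channels = x.shape[1]
    # One 3x3 averaging kernel per channel; groups=channels keeps channels independent.
    kernel = torch.full((channels, 1, 3, 3), 1.0 / 9.0, dtype=x.dtype, device=x.device)
    # Reflect-pad by one pixel so the output keeps the input's H and W.
    padded = F.pad(x, (1, 1, 1, 1), mode="reflect")
    return F.conv2d(padded, kernel, groups=channels)


patches = torch.rand(8, 3, 32, 32)  # a batch of 8 RGB patches
assert box_blur(patches).shape == patches.shape  # shape contract holds
```

Because each patch is filtered on its own, outputs near a patch border depend on the padding rather than on the neighbouring patch; overlapping patches are one way to keep such seams out of the reassembled signal.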

Example use cases:

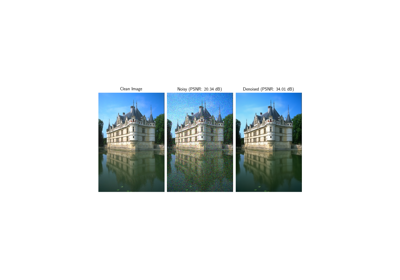

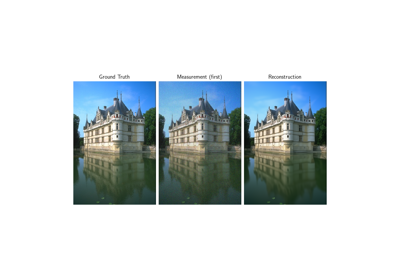

- Distributed denoising of large images or volumes
- Applying neural network priors across multiple GPUs
- Processing signals too large to fit on a single device

- Parameters:

  - ctx (DistributedContext) – distributed context manager.
  - processor (Callable[[torch.Tensor], torch.Tensor]) – processing function applied to signal patches. It should accept a batched tensor of shape (N, C, ...) and return a tensor of the same shape. Examples: a denoiser, a neural network, or a prior gradient function.
  - strategy (str | DistributedSignalStrategy | None) – signal processing strategy for patch extraction and reduction: either a strategy name ('basic' or 'overlap_tiling') or a custom strategy instance. Default is 'overlap_tiling', which handles overlapping patches with smooth blending.
  - strategy_kwargs (dict | None) – additional keyword arguments passed to the strategy constructor when strategy is given as a string name, for example patch_size, overlap, or tiling_dims. Default is None.
  - max_batch_size (int | None) – maximum number of patches processed in a single batch. If None, all local patches are batched together. Set it to 1 for sequential processing, which is useful when memory is constrained. Higher values increase throughput but require more memory. Default is None.
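The documented `max_batch_size` semantics can be illustrated with a small grouping helper. `iter_batches` is hypothetical (not a deepinv function): it splits a rank's local patches into consecutive groups of at most `max_batch_size`, and yields a single group when `max_batch_size` is `None`. In practice each group would presumably be concatenated into one (N, C, ...) tensor, e.g. with `torch.cat`, before the processor is called.

```python
from typing import Iterator, List, Optional, Sequence, TypeVar

T = TypeVar("T")


def iter_batches(patches: Sequence[T], max_batch_size: Optional[int]) -> Iterator[List[T]]:
    """Yield consecutive groups of patches following the max_batch_size rules."""
    if max_batch_size is None:
        # None: all local patches are processed together in one batch.
        if patches:
            yield list(patches)
        return
    if max_batch_size < 1:
        raise ValueError("max_batch_size must be a positive integer or None")
    # max_batch_size=1 gives sequential processing, one patch per call.
    for i in range(0, len(patches), max_batch_size):
        yield list(patches[i:i + max_batch_size])


local_patches = ["p0", "p1", "p2", "p3", "p4"]  # stand-ins for patch tensors
for batch in iter_batches(local_patches, max_batch_size=2):
    print(batch)  # ['p0', 'p1'], then ['p2', 'p3'], then ['p4']
```

The trade-off is the one stated above: fewer, larger batches mean fewer processor calls but a higher peak memory footprint per call.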