WaveletNoiseEstimator

- class deepinv.models.WaveletNoiseEstimator

Bases: torch.nn.Module

Wavelet Gaussian noise level estimator.

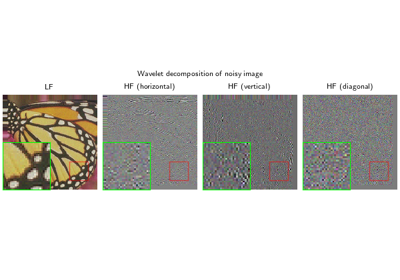

This estimator was proposed in Donoho and Johnstone [1]. It estimates the standard deviation of the Gaussian noise corrupting an image. More precisely, given a noisy image \(y = x + n\) where the entries of \(n\) are i.i.d. \(\mathcal{N}(0, \sigma^2)\), the noise level estimator computes an estimate of \(\sigma\) as

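\[\hat{\sigma} = \frac{\text{median}(|w|)}{0.6745}\]

where \(w\) are the detail (high-frequency) wavelet coefficients of the noisy image \(y\) at the first, finest level of decomposition. At this scale, the coefficients of a natural image are mostly close to zero, so \(w\) is dominated by noise. For an orthonormal wavelet, i.i.d. \(\mathcal{N}(0, \sigma^2)\) pixel noise yields \(\mathcal{N}(0, \sigma^2)\) coefficients, and \(0.6745 \approx \Phi^{-1}(3/4)\) is the median of \(|Z|\) for \(Z \sim \mathcal{N}(0, 1)\), which makes \(\hat{\sigma}\) a robust estimate of \(\sigma\).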
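The formula can be sketched in a few lines of NumPy. This is a minimal illustration, not the deepinv implementation: it assumes a single-channel 2D image, replaces ptwt with a hand-written one-level orthonormal Haar transform, and takes \(w\) to be the diagonal (HH) detail band only. The helper names haar_hh and mad_sigma are hypothetical.

```python
import numpy as np


def haar_hh(y: np.ndarray) -> np.ndarray:
    """Diagonal (HH) detail coefficients of a one-level orthonormal 2D Haar transform.

    Hypothetical helper for illustration; y is a 2D array of shape (H, W).
    """
    h, w = y.shape
    y = y[: h - h % 2, : w - w % 2]  # crop to even height and width
    a = y[0::2, 0::2]  # top-left pixel of each 2x2 block
    b = y[0::2, 1::2]  # top-right
    c = y[1::2, 0::2]  # bottom-left
    d = y[1::2, 1::2]  # bottom-right
    # Orthonormal Haar HH filter: i.i.d. N(0, sigma^2) pixels give N(0, sigma^2) coefficients.
    return (a - b - c + d) / 2.0


def mad_sigma(y: np.ndarray) -> float:
    """Donoho-Johnstone estimate median(|w|) / 0.6745 on the finest HH band."""
    w = haar_hh(y)
    return float(np.median(np.abs(w)) / 0.6745)


# Usage: pure Gaussian noise with sigma = 0.1
rng = np.random.default_rng(0)
noise = 0.1 * rng.standard_normal((256, 256))
print(mad_sigma(noise))  # close to 0.1
```

The HH filter \(\frac{1}{2}\begin{pmatrix}1 & -1\\ -1 & 1\end{pmatrix}\) sums to zero along every row and column, so it cancels constant and planar (linear-ramp) intensity exactly; this is why smooth image regions barely influence the estimate.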

Note

As noted by the authors, this estimator is an upper bound on the noise level: it may overestimate the true noise level, in particular when the SNR is high (i.e., the noise level is low compared to the signal level), because signal content such as edges and fine textures then contributes non-negligibly to the finest-scale wavelet coefficients. In such cases, the estimator may be less accurate than the PatchCovarianceNoiseEstimator, which is based on the eigenvalues of the covariance matrix of image patches.

Warning

This estimator assumes that the noise in the corrupted image follows a Gaussian distribution. It may not perform well if the noise distribution deviates significantly from Gaussian, or if the image contains strong edges or textures that can affect the wavelet coefficients.
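A toy experiment illustrates the upward bias described in the Note and Warning above. It assumes a synthetic "clean image" made only of fine-scale random texture (pixel standard deviation 0.05), which leaks into the finest wavelet band just like noise does. The estimate then tracks roughly \(\sqrt{\sigma^2 + 0.05^2}\) rather than \(\sigma\), so the relative overestimation grows as \(\sigma\) shrinks, i.e. as the SNR increases. The NumPy Haar computation is a hypothetical stand-in for ptwt, not deepinv's code.

```python
import numpy as np


def mad_sigma(y: np.ndarray) -> float:
    """median(|w|) / 0.6745 on the HH band of a one-level orthonormal Haar transform.

    Hypothetical stand-in for the ptwt-based computation; y is 2D with even sides.
    """
    w = (y[0::2, 0::2] - y[0::2, 1::2] - y[1::2, 0::2] + y[1::2, 1::2]) / 2.0
    return float(np.median(np.abs(w)) / 0.6745)


rng = np.random.default_rng(0)
# Toy "clean image": fine-scale random texture with standard deviation 0.05.
texture = 0.05 * rng.standard_normal((512, 512))

for sigma in (0.2, 0.1, 0.05, 0.01):
    y = texture + sigma * rng.standard_normal(texture.shape)
    est = mad_sigma(y)
    # The ratio est / sigma drifts above 1 as sigma decreases (higher SNR).
    print(f"sigma={sigma:.2f}  estimate={est:.4f}  ratio={est / sigma:.2f}")
```

Real images are not i.i.d. texture, so the size of the bias in practice depends on how much fine-scale detail the image contains; the toy only shows the direction of the effect.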

Warning

This model requires the PyTorch Wavelet Toolbox (ptwt) to be installed. It can be installed with pip install ptwt.

- Examples:

>>> import torch
>>> import deepinv as dinv
>>> from deepinv.models import WaveletNoiseEstimator
>>> rng = torch.Generator('cpu').manual_seed(0)
>>> noise = dinv.physics.GaussianNoise(0.1, rng=rng)(torch.zeros(1, 1, 256, 256))
>>> noise_estimator = WaveletNoiseEstimator()
>>> sigma_est = noise_estimator(noise)
>>> print(sigma_est)
tensor([0.1003])

- References:

- static estimate_noise(x)

Estimates the noise level in image x.
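The example above returns one value per image (tensor([0.1003]) for a batch of one). A batched variant can be sketched as follows. It is a hypothetical NumPy illustration, not deepinv's code: it assumes an input of shape (B, C, H, W) with even H and W, pools the HH coefficients of all channels of each image, and returns an array of shape (B,). Whether deepinv pools channels this way is an assumption; the function name estimate_noise_batched is made up for this sketch.

```python
import numpy as np


def estimate_noise_batched(x: np.ndarray) -> np.ndarray:
    """Hypothetical batched MAD noise estimate.

    x: array of shape (B, C, H, W) with even H and W.
    Returns an array of shape (B,), one sigma estimate per image.
    """
    # One-level orthonormal Haar HH band, computed on the last two axes.
    w = (
        x[..., 0::2, 0::2] - x[..., 0::2, 1::2] - x[..., 1::2, 0::2] + x[..., 1::2, 1::2]
    ) / 2.0
    # Pool channels and spatial positions of each image, then take the median per image.
    w = np.abs(w).reshape(x.shape[0], -1)
    return np.median(w, axis=1) / 0.6745


rng = np.random.default_rng(0)
sigmas = np.array([0.05, 0.1, 0.2])
# Three 3-channel images, each with its own noise level.
x = sigmas[:, None, None, None] * rng.standard_normal((3, 3, 128, 128))
print(estimate_noise_batched(x))  # roughly [0.05, 0.1, 0.2]
```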

- Parameters:

x (torch.Tensor) – input image

- Returns:

(torch.Tensor) estimated noise level

- Return type:

torch.Tensor

- forward(x)

Forward pass: returns the estimated noise level of the input image x.

- Parameters:

x (torch.Tensor) – input image

- Returns:

(torch.Tensor) estimated noise level

- Return type:

torch.Tensor